Factor Analysis

Exploratory Factor Analysis (EFA) is your go-to method for uncovering latent variables. It helps reduce data complexity by identifying underlying factors that explain observed correlations. EFA is particularly useful in surveys or tests where you want to understand the dimensions of a construct.

To perform EFA in R, you can use the factanal function. Here’s a simple guide:

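```r
# Sample data: 100 observations of 5 variables, drawn independently at random,
# so there is no real factor structure here; substitute your own items
set.seed(123)
data <- matrix(rnorm(500), ncol = 5)

# Conducting EFA with 2 factors. Passing the raw data (not just a covariance
# matrix) lets factanal() use the sample size for its chi-square test
efa_result <- factanal(data, factors = 2)

print(efa_result)
```

This output shows the factor loadings (how strongly each original variable relates to each underlying factor) and the uniquenesses (the share of each variable’s variance that the factors leave unexplained). Because the raw data were supplied, it also reports a chi-square test of whether two factors are sufficient. Factor analysis can simplify complex data considerably, but the number of factors is a modeling choice you should justify. To delve further into data mining techniques, consider “Data Mining with R: Learning with Case Studies”.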
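Random noise has no factors to find, so the loadings above are not meaningful. As a hedged sketch of what a clean result looks like, the code below simulates six items driven by two made-up latent traits (`verbal` and `numeric_skill`; the item names are also hypothetical) and checks that `factanal()` groups the items accordingly.

```r
set.seed(42)
n <- 300

# Two hypothetical latent traits, independent of each other
verbal <- rnorm(n)
numeric_skill <- rnorm(n)

# Six observed items: three driven by each trait, plus measurement noise
items <- data.frame(
  vocab    = verbal + rnorm(n, sd = 0.5),
  reading  = verbal + rnorm(n, sd = 0.5),
  spelling = verbal + rnorm(n, sd = 0.5),
  algebra  = numeric_skill + rnorm(n, sd = 0.5),
  geometry = numeric_skill + rnorm(n, sd = 0.5),
  counting = numeric_skill + rnorm(n, sd = 0.5)
)

# Two-factor EFA with varimax rotation (the factanal() default, stated explicitly)
efa_fit <- factanal(items, factors = 2, rotation = "varimax")

# Hide loadings below 0.3 so the two-block pattern is easy to read
print(efa_fit$loadings, cutoff = 0.3)

# Which factor each item loads on most strongly
dominant_factor <- apply(abs(unclass(efa_fit$loadings)), 1, which.max)
dominant_factor
```

Each group of three items should load mainly on one factor; with real data the pattern is rarely this tidy.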

With these techniques, you’re well-equipped to tackle diverse statistical challenges using R. Whether it’s comparing means, understanding relationships, or uncovering hidden patterns, R has the tools to make your analysis engaging and insightful.

Troubleshooting and Best Practices in R

Using R can feel like trying to tame a wild beast at times. But fear not! Every great statistician has faced their share of hiccups. Here, we’ll tackle common issues beginners encounter and offer some best practices for coding in R.

Common Issues

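- Package Installation Problems: Installing packages is one of the first hurdles. If R can’t find a package, check the spelling (package names are case-sensitive) and make sure your R version is up to date, since some packages require a recent release. You can install a package with `install.packages("package_name")` and then load it with `library(package_name)`. If you face issues, try restarting R and reinstalling.

- Data Import Errors: Importing data can also be tricky. If your data isn’t loading, check the file path. Use the `getwd()` function to confirm your working directory, or give the full path to the file. Remember, R is case-sensitive!

- Syntax Errors: These pesky errors often arise from missing commas, quotation marks, or parentheses. Double-check your code for typos. R’s error message (for example, “unexpected symbol”) usually indicates where parsing failed, and RStudio marks many syntax problems in the editor margin before you run the code.

- Memory Limitations: Working with large datasets can lead to memory issues. Remove objects you no longer need with `rm()`; R reclaims that memory automatically, and calling `gc()` afterwards forces a garbage collection and reports current memory use. Note that `gc()` cannot free objects that are still in use. If you still struggle, consider data.table, which is built for large datasets, or dplyr for efficient data manipulation.

- Graphing Woes: If your plots don’t appear, ensure you’ve loaded the correct library (like ggplot2). Inside loops, or in scripts run with `source()`, ggplot2 plots are not drawn automatically, so wrap them in `print()`. Sometimes, a simple restart of RStudio resolves mysterious plotting issues.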
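Several of these fixes can be wrapped into small helpers. This is a minimal base-R sketch; `read_csv_checked()` and `install_if_missing()` are hypothetical names, not functions from any package.

```r
# Hypothetical helper: stop with a clear message if a data file is missing,
# instead of letting read.csv() fail with a less specific error
read_csv_checked <- function(path) {
  if (!file.exists(path)) {
    stop("File not found: ", normalizePath(path, mustWork = FALSE),
         " (working directory: ", getwd(), ")")
  }
  read.csv(path)
}

# Hypothetical helper: install a package only when it is not already available
install_if_missing <- function(pkg) {
  if (!requireNamespace(pkg, quietly = TRUE)) {
    install.packages(pkg)
  }
  invisible(pkg)
}

# Freeing memory: gc() can only reclaim objects that are no longer referenced,
# so remove the large object first
big_matrix <- matrix(0, nrow = 1000, ncol = 1000)
print(object.size(big_matrix), units = "MB")
rm(big_matrix)
invisible(gc())
```

Printing the working directory inside the error message is a deliberate choice: a wrong working directory is one of the most common reasons a relative path fails.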

Best Practices for Efficient and Readable R Code

- Comment Your Code: Always add comments to explain your thought process. This practice not only helps others understand your work but also reminds you why you wrote something a few weeks later.

- Use Meaningful Variable Names: Avoid vague names like `x` or `data1`. Instead, opt for descriptive names like `user_age_data` or `sales_by_quarter`. This clarity is golden!

- Organize Your Code: Break your code into logical sections using functions. This approach enhances readability and reusability. Plus, it makes debugging a breeze!

- Avoid Repeated Code: If you find yourself repeating code, consider wrapping it in a function. This practice reduces clutter and makes your script easier to maintain.

- Use Version Control: If you’re working on larger projects, use Git for version control. This way, you can track changes and collaborate with others without losing your mind.

- Test Your Code Frequently: Don’t wait until the end to test your code. Regularly run your scripts to catch errors early. It’s much easier to fix small issues than to untangle a mess later.
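To illustrate the advice on functions, naming, and comments, here is a hypothetical helper, `mean_by_group()`, that replaces a repeated calculation with one documented function. The sales figures and regions are made up.

```r
# Hypothetical helper: mean of a numeric variable within each group.
# Wrapping this in a function avoids copy-pasting the same tapply() call.
mean_by_group <- function(values, groups) {
  # Fail early with a clear message if the inputs do not line up
  stopifnot(is.numeric(values), length(values) == length(groups))
  tapply(values, groups, mean)
}

# Descriptive names make the call self-explanatory:
# three quarters of (made-up) sales for each of two regions
sales_by_quarter <- c(120, 135, 150, 160, 140, 155)
sales_region <- c("North", "North", "North", "South", "South", "South")

average_sales_by_region <- mean_by_group(sales_by_quarter, sales_region)
average_sales_by_region
```

Testing the helper once with known numbers (North should average 135) is a quick way to follow the "test frequently" advice.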

Implementing these best practices will streamline your coding experience. With a little patience and practice, navigating R will become second nature! Don’t forget to check out “R Programming for Data Science” for more insights!

Conclusion

Mastering statistics using R is like learning to ride a bike—you’ll wobble and fall at first, but soon, you’ll be cruising smoothly along. Understanding statistical concepts through R not only enhances your analytical skills but also opens doors to various career opportunities.

As we’ve seen, R is not just a tool; it’s a vibrant ecosystem backed by an active community. From basic functions to advanced techniques, R empowers you to tackle real-world problems with confidence. The excitement of seeing your data come to life through analysis is unmatched.

So, keep exploring the R environment! There’s always something new to learn. Embrace your curiosity, and don’t shy away from challenges. Every error message is a stepping stone toward becoming a proficient statistician. And for those looking to expand their knowledge, consider “R in Action: Data Analysis and Graphics with R”.

Dive into the world of statistics with R, and remember, practice makes perfect! Your statistical journey is just beginning, and who knows? You might just uncover the next big trend in data analysis!

FAQs

What is R, and why is it preferred for statistical analysis?

R is a programming language specifically designed for statistical computing and graphics. It’s like the Swiss Army knife for data analysis. Why do folks love it? First, it’s free! Open-source software means you can dive into the world of statistics without stretching your wallet. But wait, there’s more! R has a strong community backing it up. This vibrant ecosystem means you’ll never be alone. Whether you’re solving a data puzzle or trying to make sense of a tricky regression, countless resources are at your fingertips. Plus, R is incredibly versatile. It handles everything from basic statistics to complex machine learning algorithms. It’s like having a trusty sidekick on your analytical adventures.

How do I get started with R programming?

Jumping into R is easier than you might think! Begin by downloading R from the Comprehensive R Archive Network (CRAN). Choose your operating system, follow the instructions, and voilà! Next, install RStudio, the user-friendly interface that makes coding in R a breeze. Once you’re all set up, familiarize yourself with the RStudio layout. By default, the Source pane at the top left is where you write and save scripts, while the Console below it runs commands and displays the results. From here, start with simple commands like `2 + 2` to get a feel for the environment. As you gain confidence, explore creating vectors and data frames. Remember, practice is key! The more you play with R, the more comfortable you’ll become.
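A first session might look like the sketch below; the names and ages are invented for illustration.

```r
2 + 2                      # prints [1] 4

ages <- c(23, 35, 41)      # a numeric vector
mean(ages)                 # prints [1] 33

people <- data.frame(      # a data frame: columns of equal length
  name = c("Ana", "Ben", "Chloe"),
  age = ages
)
str(people)                # shows each column's type and first values
people[people$age > 30, ]  # rows where age is above 30
```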

Can I use R for advanced statistical techniques?

Absolutely! R is like a high-tech toolbox filled with advanced statistical techniques. From correlation and regression to ANOVA and MANOVA, R has it all. Whether you’re analyzing complex datasets or running sophisticated models, R is up to the task. For instance, logistic regression lets you tackle binary outcomes, while multilevel models handle hierarchical data beautifully. With R, you can explore a wide array of statistical methods that can elevate your analysis and provide deeper insights into your data. So, if you’re ready to take your skills to the next level, R is your perfect partner.

Where can I find additional resources for learning R?

The learning journey doesn’t stop with installation! Numerous resources are available to help you master R. Start with online courses on platforms like Coursera and edX. They offer structured lessons, perfect for beginners and advanced users alike. Don’t forget about the power of books! “Discovering Statistics Using R” by Andy Field is a fantastic resource. Packed with humor and practical examples, it guides you through statistical concepts and R usage. Additionally, check out online forums like Stack Overflow and R-bloggers. These communities are goldmines of knowledge, where you can ask questions and exchange tips with fellow R enthusiasts. Happy learning!

Please let us know what you think about our content by leaving a comment down below!

Thank you for reading this far 🙂

All images from Pexels

Non-Parametric Tests

Non-parametric tests are your reliable friends when data doesn’t meet the assumptions of traditional tests. They make fewer assumptions about the shape of the distribution (in particular, they don’t assume normality), which makes them handy for small samples, skewed data, or ordinal data such as ratings. When should you use them? Think of situations where normality is questionable, like when your data looks more like a lopsided pancake than a bell curve.

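Two popular non-parametric tests are the Wilcoxon signed-rank test and the Kruskal-Wallis test. The Wilcoxon signed-rank test compares two related (paired) samples, such as the same people measured before and after a change. For independent groups, the counterpart is the Wilcoxon rank-sum (Mann-Whitney) test, and the Kruskal-Wallis test extends that rank-sum approach to three or more independent groups.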
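For the paired case, a minimal sketch of the Wilcoxon signed-rank test uses base R’s `wilcox.test()` with `paired = TRUE`. The before/after scores are invented for illustration and chosen without ties, so R can compute an exact p-value.

```r
# Hypothetical paired measurements: the same eight students before and after
# trying a new study technique (values are made up)
before <- c(12.1, 14.3, 11.8, 15.2, 13.7, 12.9, 14.8, 13.1)
after  <- c(12.9, 15.8, 11.5, 17.3, 14.6, 14.1, 14.2, 14.8)

# paired = TRUE runs the signed-rank test on the differences after - before;
# the statistic V is the sum of the ranks of the positive differences
signed_rank_result <- wilcox.test(after, before, paired = TRUE)
signed_rank_result
```

Six of the eight differences are positive, and they hold the larger ranks, which is what drives V up.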

Let’s say you want to compare the effectiveness of three different study techniques. Here’s how you can run the Kruskal-Wallis test in R:

```r
# Create a sample dataset (set.seed makes the random draws reproducible)
set.seed(42)
study_data <- data.frame(
  technique = rep(c("Technique A", "Technique B", "Technique C"), each = 10),
  scores = c(rnorm(10, mean = 75), rnorm(10, mean = 80), rnorm(10, mean = 70))
)

# Conducting the Kruskal-Wallis test
kruskal_result <- kruskal.test(scores ~ technique, data = study_data)
kruskal_result
```

If the p-value is low (for example, below 0.05), it suggests that at least one technique’s scores tend to differ from those of the others. The test does not say which technique, so follow up with pairwise comparisons. Non-parametric tests are your go-to when assumptions are fuzzy, ensuring you still get valuable insights. To learn more about these tests, check out “R Cookbook: Proven Recipes for Data Analysis, Statistics, and Graphics”.
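Because the Kruskal-Wallis test only says that some difference exists, a common follow-up is a set of pairwise comparisons. One base-R option, sketched here, is `pairwise.wilcox.test()` with Holm’s adjustment for running several tests. The data are regenerated so the block runs on its own.

```r
set.seed(42)
study_data <- data.frame(
  technique = rep(c("Technique A", "Technique B", "Technique C"), each = 10),
  scores = c(rnorm(10, mean = 75), rnorm(10, mean = 80), rnorm(10, mean = 70))
)

# Wilcoxon rank-sum test for every pair of techniques; p-values are adjusted
# with Holm's method to account for the three comparisons
pairwise_result <- pairwise.wilcox.test(study_data$scores, study_data$technique,
                                        p.adjust.method = "holm")
pairwise_result
```

The result is a lower-triangular table of adjusted p-values, one per pair of techniques.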

Advanced Techniques

Logistic Regression

Logistic regression is your best buddy when dealing with binary outcomes. It models how the probability of a yes/no outcome (on the log-odds scale) depends on one or more independent variables. Think of it as the magic wand that estimates the probability of yes-or-no scenarios, like whether you’ll binge-watch another series or finally tackle that laundry pile.

To implement logistic regression in R, you can use the glm function. Here’s a quick example:

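```r
# glm() is part of base R's stats package, so no extra library is needed

# Sample dataset
data <- data.frame(
  outcome = c(0, 1, 0, 1, 1, 0, 1, 0),
  predictor = c(23, 45, 35, 50, 40, 22, 34, 39)
)

# Fit logistic regression
logistic_model <- glm(outcome ~ predictor, family = binomial, data = data)
summary(logistic_model)

# Coefficients are on the log-odds scale; exponentiate them to get odds ratios
exp(coef(logistic_model))
```

`summary()` reports the coefficients on the log-odds scale; exponentiating them with `exp(coef())` gives odds ratios, which describe how the odds of the outcome change for each one-unit increase in the predictor. With only eight observations, this example is purely illustrative. For those diving deeper into R programming, consider “Advanced R” by Hadley Wickham.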
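Once the model is fitted, `predict()` turns it into probabilities for new cases. In this sketch the new predictor values (25, 35, 45) are hypothetical, and the 0.5 cut-off for classifying an outcome as 1 is a common default rather than a rule.

```r
# Same toy data as above
data <- data.frame(
  outcome = c(0, 1, 0, 1, 1, 0, 1, 0),
  predictor = c(23, 45, 35, 50, 40, 22, 34, 39)
)
logistic_model <- glm(outcome ~ predictor, family = binomial, data = data)

# Hypothetical new predictor values to score
new_cases <- data.frame(predictor = c(25, 35, 45))

# type = "response" returns probabilities; the default ("link") returns log-odds
predicted_prob <- predict(logistic_model, newdata = new_cases, type = "response")
predicted_logodds <- predict(logistic_model, newdata = new_cases)

# plogis() is the inverse logit, so it maps log-odds back to probabilities
all.equal(unname(plogis(predicted_logodds)), unname(predicted_prob))  # TRUE

# Classify as 1 when the predicted probability is at least 0.5
predicted_class <- as.integer(predicted_prob >= 0.5)
data.frame(new_cases, predicted_prob, predicted_class)
```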

Multivariate Analysis

Multivariate analysis of variance (MANOVA) is a powerful tool for analyzing multiple dependent variables simultaneously. It’s like hosting a party where everyone gets to mingle, instead of having each variable isolated in a corner. MANOVA helps determine if your independent variables significantly affect the combination of dependent variables.

Here’s how to conduct a MANOVA in R:

```r
# Sample dataset (random data, so no real group differences are built in)
set.seed(42)
data <- data.frame(
  group = rep(c("A", "B", "C"), each = 10),
  var1 = rnorm(30),
  var2 = rnorm(30)
)

# Conducting MANOVA
manova_result <- manova(cbind(var1, var2) ~ group, data = data)
summary(manova_result)
```

The output tests whether the groups differ on the dependent variables considered together. By default, `summary()` reports Pillai’s trace with an approximate F statistic and p-value; other statistics are available through its `test` argument (for example, `test = "Wilks"`). For a deeper understanding, grab “Data Science for Business: What You Need to Know about Data Mining and Data-Analytic Thinking”.
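Two common next steps after a MANOVA are sketched here on the same kind of simulated data. The first reports a different test statistic (Wilks’ lambda instead of the default Pillai’s trace). The second looks at one univariate ANOVA per dependent variable with `summary.aov()`.

```r
set.seed(42)
data <- data.frame(
  group = factor(rep(c("A", "B", "C"), each = 10)),
  var1 = rnorm(30),
  var2 = rnorm(30)
)
manova_result <- manova(cbind(var1, var2) ~ group, data = data)

# Same model, reported with Wilks' lambda instead of the default Pillai's trace
wilks_summary <- summary(manova_result, test = "Wilks")
wilks_summary

# One univariate ANOVA table per dependent variable
univariate_results <- summary.aov(manova_result)
univariate_results
```

The univariate tables help show which dependent variable drives a significant multivariate result, but they do not replace the joint test.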


- Colors: Use distinct colors to differentiate data points. You can map colors to categories using:

```r
library(ggplot2)

# my_data, variable1, variable2, and category are placeholders for your own data
ggplot(data = my_data, aes(x = variable1, y = variable2, color = category)) +
  geom_point()
```

- Labels: Add a title and axis labels with `labs()`:

```r
ggplot(data = my_data, aes(x = variable1, y = variable2)) +
  geom_point() +
  labs(title = "My Scatter Plot", x = "Variable 1", y = "Variable 2")
```

- Themes: Apply a theme such as `theme_minimal()` for a clean, modern look:

```r
ggplot(data = my_data, aes(x = variable1, y = variable2)) +
  geom_point() +
  theme_minimal()
```

With these customization techniques, your visualizations will not only convey information but also captivate your audience. So go ahead, unleash your creativity! Visualizing data is not just about aesthetics; it’s about telling compelling stories that resonate. If you’re looking for practical insights on visualization, grab “Data Visualization: A Practical Introduction”.

ANOVA (Analysis of Variance)

ANOVA is your go-to when you want to compare means across three or more groups. Think of it as the referee in a statistical boxing match. Instead of running multiple t-tests, which could lead to a false sense of victory, ANOVA keeps everything fair and square. There are several types of ANOVA: one-way, two-way, and repeated measures, each serving its unique purpose depending on your data structure.

One-way ANOVA tests one independent variable, while two-way ANOVA considers two. Repeated measures ANOVA is the heavyweight champion, analyzing data collected from the same subjects over time. It’s ideal for studies that require tracking changes, like how people’s shoe sizes evolve after years of wearing flip-flops.

Now, let’s put ANOVA into action using R. First, you’ll need a dataset. For example, let’s say we want to analyze the effect of different diets on weight loss. Here’s how you can perform ANOVA:

# Load necessary library

library(dplyr)

# Create a sample dataset

data <- data.frame(

diet = rep(c("Diet A", "Diet B", "Diet C"), each = 10),

weight_loss = c(rnorm(10, mean = 5), rnorm(10, mean = 7), rnorm(10, mean = 6))

)

# Perform ANOVA

anova_result <- aov(weight_loss ~ diet, data = data)

summary(anova_result)After running this code, you’ll get a summary of the ANOVA results. Look for the p-value; if it’s less than 0.05, you can confidently say at least one diet led to significantly different weight loss. For a deeper dive into statistical concepts, consider “Practical Statistics for Data Scientists”.

Non-Parametric Tests

Non-parametric tests are your reliable friends when data doesn’t meet the assumptions of traditional tests. They don’t require your data to follow a specific distribution, making them handy for smaller samples or ordinal data. When should you use them? Think of situations where normality is questionable, like when your data looks more like a lopsided pancake than a bell curve.

Two popular non-parametric tests are the Wilcoxon signed-rank test and the Kruskal-Wallis test. The Wilcoxon test is great for comparing two related samples, while the Kruskal-Wallis test extends this to more than two independent groups.

Let’s say you want to compare the effectiveness of three different study techniques. Here’s how you can run the Kruskal-Wallis test in R:

# Create a sample dataset

study_data <- data.frame(

technique = rep(c("Technique A", "Technique B", "Technique C"), each = 10),

scores = c(rnorm(10, mean = 75), rnorm(10, mean = 80), rnorm(10, mean = 70))

)

# Conducting the Kruskal-Wallis test

kruskal_result <- kruskal.test(scores ~ technique, data = study_data)

kruskal_resultIf the p-value is low, it signals that at least one technique stands out. Non-parametric tests are your go-to when assumptions are fuzzy, ensuring you still get valuable insights. To learn more about these tests, check out “R Cookbook: Proven Recipes for Data Analysis, Statistics, and Graphics”.

Advanced Techniques

Logistic Regression

Logistic regression is your best buddy when dealing with binary outcomes. It helps you understand the relationship between a dependent variable and one or more independent variables. Think of it as the magic wand that predicts yes or no scenarios—like whether you’ll binge-watch another series or finally tackle that laundry pile.

To implement logistic regression in R, you can use the glm function. Here’s a quick example:

# Load necessary library

library(dplyr)

# Sample dataset

data <- data.frame(

outcome = c(0, 1, 0, 1, 1, 0, 1, 0),

predictor = c(23, 45, 35, 50, 40, 22, 34, 39)

)

# Fit logistic regression

logistic_model <- glm(outcome ~ predictor, family = binomial, data = data)

summary(logistic_model)This code will give you the odds ratios, helping you interpret how changes in predictor variables affect the likelihood of the outcome. For those diving deeper into R programming, consider “Advanced R” by Hadley Wickham.

Multivariate Analysis

Multivariate analysis of variance (MANOVA) is a powerful tool for analyzing multiple dependent variables simultaneously. It’s like hosting a party where everyone gets to mingle, instead of having each variable isolated in a corner. MANOVA helps determine if your independent variables significantly affect the combination of dependent variables.

Here’s how to conduct a MANOVA in R:

# Sample dataset

data <- data.frame(

group = rep(c("A", "B", "C"), each = 10),

var1 = rnorm(30),

var2 = rnorm(30)

)

# Conducting MANOVA

manova_result <- manova(cbind(var1, var2) ~ group, data = data)

summary(manova_result)The output will indicate whether group differences exist across the dependent variables, providing a comprehensive view of your data. For a deeper understanding, grab “Data Science for Business: What You Need to Know about Data Mining and Data-Analytic Thinking”.

Factor Analysis

Exploratory Factor Analysis (EFA) is your go-to method for uncovering latent variables. It helps reduce data complexity by identifying underlying factors that explain observed correlations. EFA is particularly useful in surveys or tests where you want to understand the dimensions of a construct.

To perform EFA in R, you can use the factanal function. Here’s a simple guide:

# Sample data

data <- matrix(rnorm(100), ncol = 5)

# Conducting EFA

efa_result <- factanal(factors = 2, covmat = cov(data))

print(efa_result)This output will provide the factor loadings, helping you understand how your original variables relate to the underlying factors. Factor analysis is the key to simplifying complex data elegantly. To delve further into data mining techniques, consider “Data Mining with R: Learning with Case Studies”.

With these techniques, you’re well-equipped to tackle diverse statistical challenges using R. Whether it’s comparing means, understanding relationships, or uncovering hidden patterns, R has the tools to make your analysis engaging and insightful.

Troubleshooting and Best Practices in R

Using R can feel like trying to tame a wild beast at times. But fear not! Every great statistician has faced their share of hiccups. Here, we’ll tackle common issues beginners encounter and offer some best practices for coding in R.

Common Issues

- Package Installation Problems: One of the first hurdles is installing packages. Sometimes R throws tantrums when it can’t find a package. To fix this, ensure your R version is up-to-date. You can install a package using the command

install.packages("package_name"). If you face issues, try restarting R and reinstalling. - Data Import Errors: Importing data can also be tricky. If your data isn’t loading, check the file path. Use the

getwd()function to confirm your working directory. Remember, R is case-sensitive! - Syntax Errors: These pesky errors often arise from missing commas or parentheses. Double-check your code for typos. R will usually highlight the problematic line, making it easier to spot mistakes.

- Memory Limitations: Working with large datasets can lead to memory issues. Use the

gc()function to clear memory. If you still struggle, consider using data.table or dplyr for efficiency. - Graphing Woes: If your plots don’t appear, ensure you’ve loaded the correct library (like ggplot2). Sometimes, a simple restart of RStudio resolves mysterious plotting issues.

Best Practices for Efficient and Readable R Code

- Comment Your Code: Always add comments to explain your thought process. This practice not only helps others understand your work but also reminds you why you wrote something a few weeks later.

- Use Meaningful Variable Names: Avoid vague names like

xordata1. Instead, opt for descriptive names likeuser_age_dataorsales_by_quarter. This clarity is golden! - Organize Your Code: Break your code into logical sections using functions. This approach enhances readability and reusability. Plus, it makes debugging a breeze!

- Avoid Repeated Code: If you find yourself repeating code, consider wrapping it in a function. This practice reduces clutter and makes your script easier to maintain.

- Use Version Control: If you’re working on larger projects, use Git for version control. This way, you can track changes and collaborate with others without losing your mind.

- Test Your Code Frequently: Don’t wait until the end to test your code. Regularly run your scripts to catch errors early. It’s much easier to fix small issues than to untangle a mess later.

Implementing these best practices will streamline your coding experience. With a little patience and practice, navigating R will become second nature! Don’t forget to check out “R Programming for Data Science” for more insights!

Conclusion

Mastering statistics using R is like learning to ride a bike—you’ll wobble and fall at first, but soon, you’ll be cruising smoothly along. Understanding statistical concepts through R not only enhances your analytical skills but also opens doors to various career opportunities.

As we’ve seen, R is not just a tool; it’s a vibrant ecosystem steeped in community support. From basic functions to advanced techniques, R empowers you to tackle real-world problems with confidence. The excitement of seeing your data come to life through analysis is unmatched.

So, keep exploring the R environment! There’s always something new to learn. Embrace your curiosity, and don’t shy away from challenges. Every error message is a stepping stone toward becoming a proficient statistician. And for those looking to expand their knowledge, consider “R in Action: Data Analysis and Graphics with R”.

Dive into the world of statistics with R, and remember, practice makes perfect! Your statistical journey is just beginning, and who knows? You might just uncover the next big trend in data analysis!

FAQs

Please let us know what you think about our content by leaving a comment down below!

Thank you for reading till here 🙂

All images from Pexels

- Histograms: Great for visualizing the distribution of a single variable. Simple code:

ggplot(data = my_data, aes(x = variable)) +

geom_histogram(binwidth = 1)- Box Plots: Ideal for visualizing data spread and identifying outliers. Here’s a quick example:

ggplot(data = my_data, aes(x = factor(variable1), y = variable2)) +

geom_boxplot()With ggplot2, the possibilities are endless. You can create anything from straightforward to intricate plots with just a few lines of code.

Customizing Plots

Now that you have the basics down, let’s jazz up those plots! Customization is key to making your visualizations pop. Here are some tips to enhance your graphics:

- Colors: Use distinct colors to differentiate data points. You can map colors to categories using:

ggplot(data = my_data, aes(x = variable1, y = variable2, color = category)) +

geom_point()ggplot(data = my_data, aes(x = variable1, y = variable2)) +

geom_point() +

labs(title = "My Scatter Plot", x = "Variable 1", y = "Variable 2")theme_minimal() for a clean, modern look:ggplot(data = my_data, aes(x = variable1, y = variable2)) +

geom_point() +

theme_minimal()With these customization techniques, your visualizations will not only convey information but also captivate your audience. So go ahead, unleash your creativity! Visualizing data is not just about aesthetics; it’s about telling compelling stories that resonate. If you’re looking for practical insights on visualization, grab “Data Visualization: A Practical Introduction”.

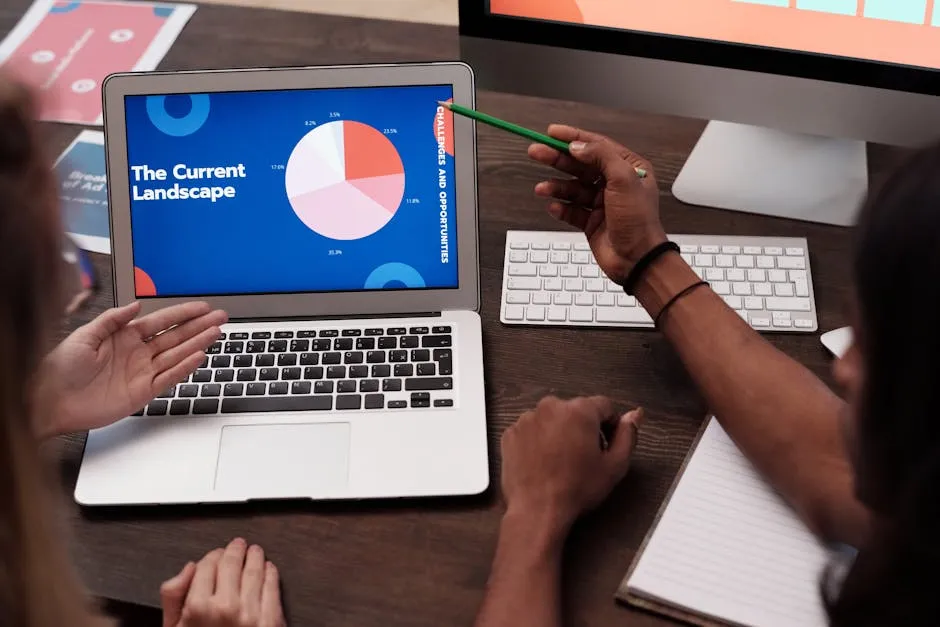

- Scatter Plots: These are perfect for showing relationships between two variables. Here’s how to make one:

ggplot(data = my_data, aes(x = variable1, y = variable2)) +
  geom_point()

- Histograms: Great for visualizing the distribution of a single variable. Simple code:

ggplot(data = my_data, aes(x = variable)) +
  geom_histogram(binwidth = 1)

- Box Plots: Ideal for visualizing data spread and identifying outliers. Here’s a quick example:

ggplot(data = my_data, aes(x = factor(variable1), y = variable2)) +
  geom_boxplot()

With ggplot2, the possibilities are endless. You can create anything from straightforward to intricate plots with just a few lines of code.
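The snippets above assume ggplot2 is loaded and that you already have a data frame called `my_data`. To try them end to end, here is a sketch that builds a hypothetical `my_data` from random numbers, with column names chosen to match the placeholders (`variable`, `variable1`, `variable2`).

```r
library(ggplot2)

# Hypothetical example data; only the column names come from the snippets above
set.seed(42)
my_data <- data.frame(
  variable  = rnorm(100, mean = 10, sd = 2),   # numeric, for the histogram
  variable1 = sample(1:4, 100, replace = TRUE), # small integer codes
  variable2 = rnorm(100)                        # numeric response
)

# Scatter plot: relationship between two numeric variables
p_scatter <- ggplot(data = my_data, aes(x = variable1, y = variable2)) +
  geom_point()

# Histogram: distribution of one variable, using bins 1 unit wide
p_hist <- ggplot(data = my_data, aes(x = variable)) +
  geom_histogram(binwidth = 1)

# Box plot: spread of variable2 within each level of variable1
p_box <- ggplot(data = my_data, aes(x = factor(variable1), y = variable2)) +
  geom_boxplot()

# Call print() on any of these objects (e.g. print(p_box)) to draw it
```

Storing each plot in a variable, rather than drawing it immediately, makes it easy to add customization layers later with `+`.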

Customizing Plots

Now that you have the basics down, let’s jazz up those plots! Customization is key to making your visualizations pop. Here are some tips to enhance your graphics:

- Colors: Use distinct colors to differentiate data points. You can map colors to categories using:

ggplot(data = my_data, aes(x = variable1, y = variable2, color = category)) +
  geom_point()

- Titles and Labels: Add a title and descriptive axis labels with labs():

ggplot(data = my_data, aes(x = variable1, y = variable2)) +
  geom_point() +
  labs(title = "My Scatter Plot", x = "Variable 1", y = "Variable 2")

- Themes: Apply a built-in theme such as theme_minimal() for a clean, modern look:

ggplot(data = my_data, aes(x = variable1, y = variable2)) +
  geom_point() +
  theme_minimal()

With these customization techniques, your visualizations will not only convey information but also captivate your audience. So go ahead, unleash your creativity! Visualizing data is not just about aesthetics; it’s about telling compelling stories that resonate. If you’re looking for practical insights on visualization, grab “Data Visualization: A Practical Introduction”.
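Because each customization is just another layer added with `+`, they can be stacked in a single plot. This sketch assumes ggplot2 is installed and uses a hypothetical `my_data` with a three-level `category` column.

```r
library(ggplot2)

# Hypothetical data with a grouping column named `category`
set.seed(1)
my_data <- data.frame(
  variable1 = rnorm(60),
  variable2 = rnorm(60),
  category  = rep(c("A", "B", "C"), each = 20)
)

# Colour by category, custom title and axis/legend labels, minimal theme
p <- ggplot(data = my_data, aes(x = variable1, y = variable2, color = category)) +
  geom_point(size = 2) +
  labs(title = "My Scatter Plot", x = "Variable 1", y = "Variable 2",
       color = "Category") +
  theme_minimal()

print(p)  # draws the plot on the current graphics device
```

The `color = "Category"` entry inside `labs()` renames the legend title, which otherwise defaults to the column name.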

ANOVA (Analysis of Variance)

ANOVA is your go-to when you want to compare means across three or more groups. Think of it as the referee in a statistical boxing match. Running a separate t-test for every pair of groups inflates the overall chance of a false positive, a false sense of victory; ANOVA keeps everything fair and square by testing all the group means at once with a single F-test. There are several types of ANOVA: one-way, two-way, and repeated measures, each suited to a different data structure.

One-way ANOVA tests one independent variable, while two-way ANOVA considers two. Repeated measures ANOVA is the heavyweight champion, analyzing data collected from the same subjects over time. It’s ideal for studies that require tracking changes, like how people’s shoe sizes evolve after years of wearing flip-flops.
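In `aov()`, moving from one-way to two-way ANOVA mostly means adding a second factor to the model formula. The sketch below uses R's built-in `warpbreaks` dataset (number of yarn breaks by wool type and tension), chosen purely for illustration; it is separate from the diet example that follows.

```r
# One-way ANOVA: a single factor (tension) explains the number of breaks
one_way <- aov(breaks ~ tension, data = warpbreaks)
summary(one_way)

# Two-way ANOVA: two factors plus their interaction.
# `wool * tension` expands to wool + tension + wool:tension
two_way <- aov(breaks ~ wool * tension, data = warpbreaks)
two_way_table <- summary(two_way)[[1]]
two_way_table
```

The `wool:tension` row tests whether the effect of tension depends on the wool type; drop it by writing `wool + tension` if you only want main effects.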

Now, let’s put ANOVA into action using R. First, you’ll need a dataset. For example, let’s say we want to analyze the effect of different diets on weight loss. Here’s how you can perform ANOVA:

# Create a sample dataset (set.seed makes the random draws reproducible)

set.seed(123)
data <- data.frame(
  diet = factor(rep(c("Diet A", "Diet B", "Diet C"), each = 10)),
  weight_loss = c(rnorm(10, mean = 5), rnorm(10, mean = 7), rnorm(10, mean = 6))
)

# Perform a one-way ANOVA: does mean weight loss differ by diet?

anova_result <- aov(weight_loss ~ diet, data = data)
summary(anova_result)

After running this code, you’ll get an ANOVA table. Look at the p-value in the Pr(>F) column: if it’s below your chosen significance level (commonly 0.05), you have evidence that mean weight loss differs for at least one diet, although the test alone doesn’t tell you which one. ANOVA also assumes roughly normal residuals and similar variances across groups, so check those before leaning on the result. For a deeper dive into statistical concepts, consider “Practical Statistics for Data Scientists”.
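A significant F-test says only that the diet means are not all equal. One common follow-up, shown here as a sketch rather than the only option, is Tukey's honest significant difference test via base R's `TukeyHSD()`, which compares every pair of diets while controlling the family-wise error rate. The block re-creates the sample data so it runs on its own.

```r
# Re-create the diet data (set.seed makes the random draws reproducible)
set.seed(123)
data <- data.frame(
  diet = factor(rep(c("Diet A", "Diet B", "Diet C"), each = 10)),
  weight_loss = c(rnorm(10, mean = 5), rnorm(10, mean = 7), rnorm(10, mean = 6))
)

anova_result <- aov(weight_loss ~ diet, data = data)

# All pairwise differences in mean weight loss, with Tukey-adjusted
# confidence intervals (lwr, upr) and p-values (p adj)
tukey_result <- TukeyHSD(anova_result, "diet")
tukey_result
```

Pairs whose confidence interval excludes zero (equivalently, a small `p adj`) are the diets that actually differ.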

Non-Parametric Tests

Non-parametric tests are your reliable friends when data doesn’t meet the assumptions of traditional tests. They don’t require your data to follow a specific distribution, making them handy for smaller samples or ordinal data. When should you use them? Think of situations where normality is questionable, like when your data looks more like a lopsided pancake than a bell curve.

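Two popular non-parametric tests are the Wilcoxon signed-rank test and the Kruskal-Wallis test. The Wilcoxon signed-rank test compares two related (paired) samples, such as the same people measured before and after a treatment. The Kruskal-Wallis test handles more than two independent groups; it generalizes the Wilcoxon rank-sum (Mann-Whitney) test, the independent-samples counterpart of the signed-rank test.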
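As a sketch of the paired case, the block below runs a signed-rank test on hypothetical before/after scores for the same eight students; the numbers are made up for illustration.

```r
# Hypothetical paired data: the same 8 students before and after a course
before <- c(62, 70, 55, 68, 74, 60, 66, 58)
after  <- c(67, 71, 63, 70, 80, 57, 73, 62)

# Wilcoxon signed-rank test: paired = TRUE tells R the samples are related,
# so it ranks the within-student differences (after - before)
signed_rank <- wilcox.test(after, before, paired = TRUE)
signed_rank

# For two *independent* groups, the rank-sum (Mann-Whitney) version is
# wilcox.test(x, y), since paired = FALSE is the default
```

The reported `V` statistic is the sum of the ranks of the positive differences; with no ties and fewer than 50 pairs, R computes an exact p-value.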

Let’s say you want to compare the effectiveness of three different study techniques. Here’s how you can run the Kruskal-Wallis test in R:

# Create a sample dataset (set.seed makes the random draws reproducible)

set.seed(123)
study_data <- data.frame(
  technique = factor(rep(c("Technique A", "Technique B", "Technique C"), each = 10)),
  scores = c(rnorm(10, mean = 75), rnorm(10, mean = 80), rnorm(10, mean = 70))
)

# Conducting the Kruskal-Wallis test

kruskal_result <- kruskal.test(scores ~ technique, data = study_data)
kruskal_result

If the p-value is below your chosen significance level, at least one technique’s scores differ systematically from the others; a pairwise follow-up test is needed to say which. Non-parametric tests are your go-to when assumptions are fuzzy, ensuring you still get valuable insights. To learn more about these tests, check out “R Cookbook: Proven Recipes for Data Analysis, Statistics, and Graphics”.
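One way to find out which techniques differ after a significant Kruskal-Wallis result is base R's `pairwise.wilcox.test()`, which runs rank-sum tests on every pair and adjusts the p-values for multiple comparisons. This is a sketch; the Holm adjustment used here is one reasonable choice among several.

```r
# Re-create the study data so the block runs on its own
set.seed(123)
study_data <- data.frame(
  technique = factor(rep(c("Technique A", "Technique B", "Technique C"), each = 10)),
  scores = c(rnorm(10, mean = 75), rnorm(10, mean = 80), rnorm(10, mean = 70))
)

# Overall test: do the score distributions differ across techniques?
kruskal_result <- kruskal.test(scores ~ technique, data = study_data)

# Pairwise rank-sum tests with Holm-adjusted p-values
pairwise_result <- pairwise.wilcox.test(study_data$scores, study_data$technique,
                                        p.adjust.method = "holm")
pairwise_result
```

The output is a lower-triangular table of adjusted p-values, one cell per pair of techniques.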

Advanced Techniques

Logistic Regression

Logistic regression is your best buddy when dealing with binary outcomes. It models how one or more independent variables relate to the probability that a binary dependent variable (yes/no, 1/0) equals 1. Think of it as the magic wand that predicts yes-or-no scenarios, like whether you’ll binge-watch another series or finally tackle that laundry pile.

To implement logistic regression in R, use the glm() function with family = binomial. Here’s a quick example:

# Sample dataset (a tiny toy example; real analyses need far more observations)

data <- data.frame(
  outcome = c(0, 1, 0, 1, 1, 0, 1, 0),
  predictor = c(23, 45, 35, 50, 40, 22, 34, 39)
)

# Fit logistic regression

logistic_model <- glm(outcome ~ predictor, family = binomial, data = data)
summary(logistic_model)

The summary reports coefficients on the log-odds scale: each estimate is the change in the log-odds of the outcome for a one-unit increase in the predictor. Exponentiate them with exp(coef(logistic_model)) to get odds ratios. For those diving deeper into R programming, consider “Advanced R” by Hadley Wickham.
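To move from raw coefficients to something easier to interpret, the sketch below converts them to odds ratios and predicts probabilities for two hypothetical predictor values (25 and 45, chosen only for illustration). `confint.default()` gives Wald-type intervals; other interval methods exist.

```r
data <- data.frame(
  outcome = c(0, 1, 0, 1, 1, 0, 1, 0),
  predictor = c(23, 45, 35, 50, 40, 22, 34, 39)
)
logistic_model <- glm(outcome ~ predictor, family = binomial, data = data)

# Coefficients are on the log-odds scale; exp() turns them into odds ratios
odds_ratios <- exp(coef(logistic_model))
odds_ratios

# Wald 95% confidence intervals, transformed to the odds-ratio scale
exp(confint.default(logistic_model))

# Predicted probability that outcome == 1 for new predictor values
new_points <- data.frame(predictor = c(25, 45))
probs <- predict(logistic_model, newdata = new_points, type = "response")
probs
```

An odds ratio above 1 for `predictor` means each one-unit increase multiplies the odds of the outcome by that factor; `type = "response"` is what puts predictions on the probability scale instead of the log-odds scale.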

- Avoid Repeated Code: If you find yourself repeating code, consider wrapping it in a function. This practice reduces clutter and makes your script easier to maintain.

- Use Version Control: If you’re working on larger projects, use Git for version control. This way, you can track changes and collaborate with others without losing your mind.

- Test Your Code Frequently: Don’t wait until the end to test your code. Regularly run your scripts to catch errors early. It’s much easier to fix small issues than to untangle a mess later.
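The minimal sketch below shows several of these practices working together: comments, descriptive names, one function instead of repeated code, and a quick early check. All of these names are hypothetical and were invented for illustration:

- summarize_column() is the helper function.
- customer_data is the example data frame.
- user_age and monthly_spend are its columns.

The stopifnot() call is only a lightweight stand-in for a fuller test suite.

```r
# Summarize one numeric column: number of non-missing values, mean, and SD.
# Missing values (NA) are dropped before the statistics are computed.
summarize_column <- function(data_frame, column_name) {
  values <- data_frame[[column_name]]
  values <- values[!is.na(values)]
  c(n = length(values), mean = mean(values), sd = sd(values))
}

# Descriptive names make the data self-explanatory.
customer_data <- data.frame(
  user_age = c(23, 35, 41, NA, 29),
  monthly_spend = c(120, 80, 150, 95, 110)
)

# One function call per column instead of copy-pasting the same lines.
# sapply() returns a matrix: one row per statistic, one column per variable.
column_summaries <- sapply(c("user_age", "monthly_spend"),
                           summarize_column, data_frame = customer_data)
print(column_summaries)

# Test early: this check fails loudly if the function misbehaves.
stopifnot(column_summaries["n", "user_age"] == 4)
```

If the summary logic ever needs to change, for example to add a median, you edit one function rather than every copy of the code.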

Implementing these best practices will streamline your coding experience. With a little patience and practice, navigating R will become second nature! Don’t forget to check out “R Programming for Data Science” for more insights!

Conclusion

Mastering statistics using R is like learning to ride a bike—you’ll wobble and fall at first, but soon, you’ll be cruising smoothly along. Understanding statistical concepts through R not only enhances your analytical skills but also opens doors to various career opportunities.

As we've seen, R is not just a tool; it's a vibrant ecosystem backed by a supportive community. From basic functions to advanced techniques, R empowers you to tackle real-world problems with confidence. The excitement of seeing your data come to life through analysis is unmatched.

So, keep exploring the R environment! There’s always something new to learn. Embrace your curiosity, and don’t shy away from challenges. Every error message is a stepping stone toward becoming a proficient statistician. And for those looking to expand their knowledge, consider “R in Action: Data Analysis and Graphics with R”.

Dive into the world of statistics with R, and remember, practice makes perfect! Your statistical journey is just beginning, and who knows? You might just uncover the next big trend in data analysis!


Thank you for reading till here 🙂

All images from Pexels

