Introduction

Welcome to the fascinating world of probability and statistics! These two fields are not just numbers and formulas; they are the backbone of decision-making in engineering and the sciences. Imagine trying to design a bridge without knowing how much weight it can handle. Scary, right? That’s where statistics strut their stuff, helping engineers and scientists make informed decisions based on data.

Probability provides a framework for quantifying uncertainty. It helps you understand the likelihood of events occurring. Whether you’re predicting weather patterns or assessing the risk of a project failing, probability plays a crucial role. On the other hand, statistics equips you with the tools to analyze data and derive meaningful insights. It transforms raw data into actionable information, which is vital for problem-solving.

Our target audience for this guide includes students, professionals, and educators in engineering and the sciences. If you aim to enhance your understanding of these subjects, you’re in the right place! And for those who want to delve deeper into practical statistics, check out Practical Statistics for Data Scientists by Peter Bruce. It’s like having a cheat sheet for the real world!

This comprehensive guide will walk you through essential concepts, starting from the basics of probability, diving into key statistical methods, and exploring real-world applications in engineering and sciences. You’ll also find insights into advanced topics, making this resource suitable for all levels of expertise.

So, buckle up! Let’s navigate through the intricate yet thrilling lanes of probability and statistics. By the end of this guide, you will not only grasp the theoretical aspects but also appreciate their practical implications, setting you on a path toward becoming a statistics whiz in your field.


Understanding Probability

What is Probability?

Probability is the measure of the likelihood that an event will occur, expressed as a number between 0 (impossible) and 1 (certain). Think of it as a crystal ball for predicting the future, but with math instead of magic! In engineering and sciences, probability helps us make sense of uncertain outcomes. For instance, when designing a new product, engineers use probability to forecast performance based on varying conditions.

There are different interpretations of probability. The frequentist perspective defines probability as the long-run frequency of an event over many repeated trials. In contrast, the Bayesian interpretation incorporates prior knowledge or beliefs, adjusting probabilities as new evidence emerges. Both views provide valuable insights, depending on the context. For a deeper treatment, grab a copy of Introduction to Probability by Dimitri P. Bertsekas and John N. Tsitsiklis.

Understanding these frameworks is essential for applying probability effectively. Engineers often rely on frequentist methods for quality control, while scientists might use Bayesian approaches for data analysis in experimental research. Ultimately, both interpretations aim to quantify uncertainty, enabling better decision-making in unpredictable environments.
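To make the contrast concrete, here is a minimal Python sketch that estimates a pass rate both ways. The data (7 passes in 10 trials), the uniform Beta(1, 1) prior, and the function names are illustrative assumptions. The Bayesian side uses the standard Beta-binomial conjugate update, one common way to fold new evidence into a prior belief.

```python
from fractions import Fraction


def frequentist_estimate(successes: int, trials: int) -> Fraction:
    """Long-run-frequency view: estimate p by the observed proportion."""
    return Fraction(successes, trials)


def bayesian_update(alpha: int, beta: int, successes: int, trials: int) -> tuple[int, int]:
    """Beta-binomial conjugate update: a Beta(alpha, beta) prior combined with
    binomial data gives a Beta(alpha + successes, beta + failures) posterior."""
    return alpha + successes, beta + (trials - successes)


def beta_mean(alpha: int, beta: int) -> Fraction:
    """Mean of a Beta(alpha, beta) distribution."""
    return Fraction(alpha, alpha + beta)


# Hypothetical data: 7 of 10 prototype tests pass.
successes, trials = 7, 10
print("Frequentist estimate:", frequentist_estimate(successes, trials))  # 7/10

# A uniform Beta(1, 1) prior encodes "no strong prior belief".
a, b = bayesian_update(1, 1, successes, trials)
print("Posterior:", (a, b), "mean:", beta_mean(a, b))  # (8, 4) mean: 2/3
```

With more data the two estimates converge; the prior matters most when trials are few.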

With a solid understanding of probability, you’ll be prepared to tackle more complex statistical concepts. So, let’s keep rolling and uncover more about key probability concepts!

Key Concepts in Probability

Sample Space and Events

In probability, the sample space is the set of all possible outcomes of a random experiment. Think of it as a menu at a restaurant; it lists everything you could possibly order. For instance, if you roll a six-sided die, the sample space is {1, 2, 3, 4, 5, 6}. Each number represents an outcome.

An event is a specific subset of the sample space. It could be rolling an even number, which would include the outcomes {2, 4, 6}. Events can be simple, containing just one outcome, or compound, including multiple outcomes. Understanding sample spaces and events is crucial for grasping more complex probability concepts later on. If you want more insights into this area, check out Probability and Statistics for Engineers and Scientists by Anthony J. Hayter.
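Representing the sample space as a set makes these ideas concrete. The Python sketch below assumes a fair die, so every outcome is equally likely; that assumption is what justifies computing a probability as (outcomes in the event) / (outcomes in the sample space).

```python
from fractions import Fraction

# Sample space for one roll of a fair six-sided die.
sample_space = {1, 2, 3, 4, 5, 6}

# An event is a subset of the sample space: "roll an even number".
even = {x for x in sample_space if x % 2 == 0}


def probability(event: set, space: set) -> Fraction:
    """Classical probability; valid only when all outcomes are equally likely."""
    if not event <= space:
        raise ValueError("an event must be a subset of the sample space")
    return Fraction(len(event), len(space))


print(sorted(even))                     # [2, 4, 6]
print(probability(even, sample_space))  # 1/2 (a compound event)
print(probability({3}, sample_space))   # 1/6 (a simple event)
```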

Rules of Probability

Probability operates on a few fundamental rules that help us calculate the likelihood of events. The addition rule states that the probability of either event A or event B occurring is the sum of their individual probabilities, minus the probability that both occur (the overlap). For example, if you flip a coin, heads (0.5) and tails (0.5) cannot both happen on the same flip, so the overlap is 0 and the probabilities simply add:

P(A or B) = P(A) + P(B) – P(A and B) = 0.5 + 0.5 – 0 = 1

Next, we have the multiplication rule for independent events. This rule states that the probability of both events A and B occurring is the product of their individual probabilities. Imagine rolling two dice. The probability of rolling a 4 on the first die and a 5 on the second die would be:

P(A and B) = P(A) * P(B) = (1/6) * (1/6) = 1/36

These rules form the backbone of probability calculations, ensuring we understand how different events interact.
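Both rules can be checked by brute force. This Python sketch enumerates all 36 outcomes of two dice, assuming the dice are fair and independent, and compares direct counts with the formulas. The event pair used here (first die shows 4, second die shows 5) also has a nonzero overlap, so it exercises the subtraction term that the coin example skips.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two fair dice: (first, second).
space = set(product(range(1, 7), repeat=2))


def prob(event: set) -> Fraction:
    """Classical probability over the two-dice sample space."""
    return Fraction(len(event), len(space))


A = {o for o in space if o[0] == 4}  # first die shows 4
B = {o for o in space if o[1] == 5}  # second die shows 5

# Multiplication rule for independent events: P(A and B) = P(A) * P(B).
print(prob(A & B), prob(A) * prob(B))  # 1/36 1/36

# Addition rule: P(A or B) = P(A) + P(B) - P(A and B); the overlap is (4, 5).
print(prob(A | B), prob(A) + prob(B) - prob(A & B))  # 11/36 11/36
```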

Conditional Probability

Conditional probability is all about the likelihood of an event occurring, given that another event has already happened. It’s like asking, “What are my chances of winning the lottery, knowing I already bought a ticket?”

Bayes’ theorem is a powerful tool in this realm, allowing us to update our probabilities based on new evidence. The formula is:

P(A|B) = [P(B|A) * P(A)] / P(B)

Here, P(A|B) is the probability of event A occurring given that B is true. For instance, in medical testing, Bayes’ theorem helps determine the probability of having a disease based on a positive test result.

In practical applications, consider a quality control scenario. Suppose 5% of a factory’s products fail inspection, and the factory also knows its underlying defect rate and how reliably the inspection catches defective items. When a product fails, Bayes’ theorem combines these figures to calculate the probability that the product is indeed defective. That probability is not simply the inspection’s accuracy, because some good products fail too. This method is essential in decision-making processes across various fields, from engineering to healthcare. And if you want to sharpen your skills in this area, grab The Signal and the Noise by Nate Silver.
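Here is a minimal Python sketch of that calculation. The defect rate (4%), detection rate (95%), and false-alarm rate (1.25%) are hypothetical figures chosen so that the overall failure rate comes out to the 5% used in the example. P(B) is computed with the law of total probability, and the function and variable names are illustrative.

```python
def posterior(prior: float, p_fail_given_defective: float, p_fail_given_good: float) -> float:
    """Bayes' theorem: P(A|B) = P(B|A) * P(A) / P(B),
    with P(B) = P(B|A) P(A) + P(B|not A) P(not A)."""
    p_b = p_fail_given_defective * prior + p_fail_given_good * (1 - prior)
    return p_fail_given_defective * prior / p_b


# Hypothetical inspection figures (assumptions, not data from the text):
defect_rate = 0.04    # P(defective)
detect_rate = 0.95    # P(fails inspection | defective)
false_alarm = 0.0125  # P(fails inspection | good)

p_fail = detect_rate * defect_rate + false_alarm * (1 - defect_rate)
print(f"P(fail) = {p_fail:.3f}")  # P(fail) = 0.050
print(f"P(defective | fail) = {posterior(defect_rate, detect_rate, false_alarm):.2f}")  # 0.76
```

Even with a 95% detection rate, about a quarter of failed parts would be good ones under these assumptions, which is exactly the kind of insight Bayes’ theorem surfaces.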

Statistics in Engineering

Descriptive Statistics

Descriptive statistics is the art of summarizing data in a way that makes it understandable. Imagine trying to explain your last vacation. Instead of listing every detail, you might mention the highlights: the best beach, the tastiest food, and the most breathtaking sunset. That’s what descriptive statistics does for data. It provides a snapshot, letting you grasp the overall picture quickly.

Descriptive statistics includes measures like mean, median, and mode. These tools help summarize data sets, making them easier to interpret. In engineering, this is crucial. Whether analyzing survey results or measuring product performance, descriptive statistics helps professionals make sense of the numbers. If you want a comprehensive guide on this, consider Statistics for Engineers and Scientists by William Navidi.

Measures of Central Tendency

Measures of central tendency include the mean, median, and mode—essentially, the three amigos of data summary!

Mean: This is the average of a data set. You calculate it by adding all values and dividing by the number of values. For example, if five engineers report their project completion times as 5, 7, 8, 6, and 4 hours, the mean is (5 + 7 + 8 + 6 + 4) / 5 = 6 hours.

Median: The median is the middle value when you arrange data in ascending order. If you have an odd number of observations, it’s straightforward; with an even number, you average the two middle values. In our previous example, if we sorted the times (4, 5, 6, 7, 8), the median is 6 hours.


Mode: The mode is the most frequently occurring value in a data set. In many engineering applications, it helps identify the most common failures or outcomes. If our engineers reported times of 5, 5, 6, 7, and 8 hours, the mode would be 5 hours.
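Python’s standard-library statistics module implements all three measures, so the worked examples above can be checked in a few lines. This is one convenient tool among many, not the only way to compute them.

```python
import statistics

times = [5, 7, 8, 6, 4]          # project completion times (hours) from the text
print(statistics.mean(times))    # 6
print(statistics.median(times))  # 6

times_with_repeat = [5, 5, 6, 7, 8]
print(statistics.mode(times_with_repeat))  # 5

# With an even number of values, the median averages the two middle ones.
print(statistics.median([4, 5, 6, 7]))     # 5.5
```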

Understanding these measures is crucial for engineers. They provide a foundation for making data-driven decisions, optimizing processes, and improving outcomes.

Measures of Dispersion

When it comes to understanding data, measures of dispersion are your best pals. They help us understand how spread out or clustered our data points are. Imagine trying to determine the reliability of a new engineering design; knowing how much variation exists in your measurements becomes crucial. Here, we’ll cover three main measures: range, variance, and standard deviation.

Range is the simplest measure of dispersion. It tells you how far apart the highest and lowest values in a data set are. Just like checking how much taller the tallest skyscraper is than the smallest hut, the range gives you a quick snapshot of the spread. To get the range, subtract the smallest value from the largest. For example, if your lowest engineering test score is 85 and your highest is 95, the range is 95 - 85 = 10. Easy peasy!

Now, onto variance, which takes a bit more effort but gives you deeper insights. Variance measures how much each number in a set differs from the mean (average). Think of it as a party where guests are mingling; variance tells you how much they spread out from the host (the mean). To calculate variance, follow these steps: first, find the mean of your data set. Next, subtract the mean from each data point, square the result, and then average those squared differences. The formula looks like this:

\[ \text{Variance } (\sigma^2) = \frac{\sum_{i=1}^{N} (x_i - \mu)^2}{N} \]

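where x_i represents each data point, μ is the mean, and N is the number of points. This is the population variance; when your data are a sample drawn from a larger population, divide by n - 1 instead of N to get the sample variance.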

Lastly, let’s talk about standard deviation. This measure is like variance’s more relatable sibling. While variance is in squared units (which can be confusing), the standard deviation brings it back to the original units. It tells you how much the data points typically deviate from the mean. To calculate the standard deviation, simply take the square root of the variance:

\[ \text{Standard Deviation } (\sigma) = \sqrt{\sigma^2} \]

For instance, if your variance is 25, your standard deviation would be 5. This means a typical data point lies roughly 5 units from the mean (strictly speaking, 5 is the root-mean-square distance from the mean rather than the plain average distance).
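The three measures take only a few lines of Python. This sketch uses a small hypothetical data set and computes the population variance exactly as in the formula above. It then cross-checks the result against the standard-library statistics module, whose variance() and stdev() return the sample versions (dividing by n - 1) while pvariance() and pstdev() return the population versions.

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical measurements

# Range: largest value minus smallest value.
data_range = max(data) - min(data)

# Population variance: average squared deviation from the mean (divide by N).
mu = sum(data) / len(data)
pop_var = sum((x - mu) ** 2 for x in data) / len(data)

# Standard deviation: square root of the variance, back in the original units.
pop_sd = math.sqrt(pop_var)

print(data_range)           # 7
print(mu, pop_var, pop_sd)  # 5.0 4.0 2.0

# Sample variance divides by n - 1, so it is slightly larger (32 / 7 here).
sample_var = statistics.variance(data)
print(sample_var)
```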

In summary, measures of dispersion—range, variance, and standard deviation—are vital for interpreting data in engineering and sciences. They help us understand the consistency of our measurements and the reliability of our findings. So next time you crunch numbers, don’t forget to check out these handy measures!

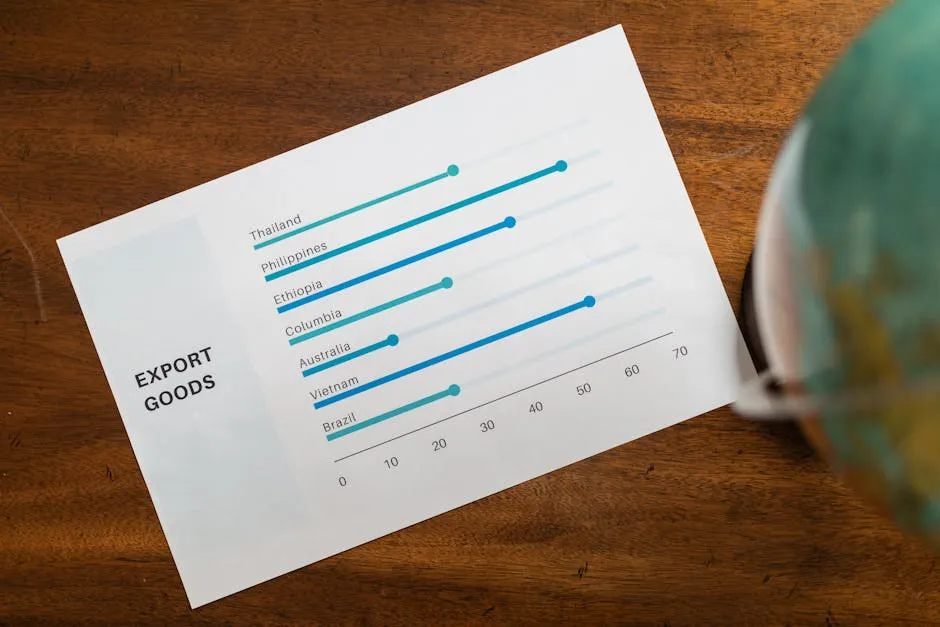

Applications in Various Fields

Probability and statistics are the unsung heroes in various engineering and scientific fields. They enable professionals to make data-driven decisions, optimize designs, and predict outcomes with a sprinkle of confidence. Let’s take a closer look at how these two fields play out in civil engineering, mechanical engineering, and environmental science.

Civil Engineering

In civil engineering, probability and statistics are vital for risk assessment and project management. When designing structures like bridges and dams, engineers use statistical methods to analyze material strengths and predict how these structures will perform under various loads and environmental conditions. For instance, when analyzing soil samples, engineers often rely on statistical sampling methods to determine the soil’s properties and ensure the ground can support the intended structure. This data-driven approach helps mitigate risks, ensuring safety and reliability. And if you want a comprehensive guide on engineering statistics, check out Engineering Statistics by Douglas C. Montgomery.

Moreover, civil engineers use regression analysis to assess the relationships between different variables, such as traffic patterns and accident rates. By understanding these relationships, they can improve road designs and implement better traffic management strategies. All in all, statistics help civil engineers build safe infrastructure that stands the test of time.

Mechanical Engineering

Mechanical engineering also benefits greatly from the application of probability and statistics. Engineers in this field often deal with complex systems that involve numerous variables and uncertainties. For example, when designing a new engine, mechanical engineers must consider various factors such as temperature, pressure, and material fatigue. They utilize statistical models to simulate different operating conditions and predict the performance of the engine.

Quality control is another area where statistics shine. By applying statistical process control (SPC), engineers can monitor manufacturing processes and detect any deviations from the norm. This proactive approach allows them to make timely adjustments, minimizing defects and ensuring quality in production. In essence, the marriage of mechanical engineering with probability and statistics leads to more reliable and efficient machines. To enhance your understanding of these concepts, consider reading Statistics for Business and Economics by Paul Newbold.
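A simplified sketch of the SPC idea in Python: set a center line and upper and lower control limits (here, the mean plus or minus three standard deviations of a stable reference run) and flag new measurements that fall outside them. The shaft-diameter data and function names are hypothetical. Real control charts usually plot subgroup means and estimate the standard deviation from subgroup ranges, so treat this as an illustration of the principle rather than a complete X-bar chart.

```python
import statistics


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float, float]:
    """Simplified Shewhart-style limits: center line at the baseline mean,
    limits at mean +/- k standard deviations of the baseline data."""
    center = statistics.mean(baseline)
    sd = statistics.stdev(baseline)
    return center - k * sd, center, center + k * sd


def out_of_control(samples: list[float], lcl: float, ucl: float) -> list[int]:
    """Indices of samples that fall outside the control limits."""
    return [i for i, x in enumerate(samples) if x < lcl or x > ucl]


# Hypothetical shaft diameters (mm) from a stable reference run.
baseline = [10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97, 10.00]
lcl, center, ucl = control_limits(baseline)  # roughly 9.94, 10.00, 10.06

new_parts = [10.01, 9.99, 10.12, 10.00]
print(out_of_control(new_parts, lcl, ucl))  # [2]
```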

Environmental Science

In environmental science, probability and statistics are indispensable for understanding and addressing complex issues such as climate change and pollution. Environmental scientists use statistical models to analyze data from various sources, including weather patterns, pollutant levels, and wildlife populations. By applying these models, they can identify trends, make predictions, and inform policy decisions.

Take climate modeling, for instance. Scientists use statistical techniques to analyze historical climate data and predict future climate scenarios. This information is crucial for developing strategies to combat climate change and protect ecosystems. Additionally, environmental statisticians often conduct risk assessments to evaluate the potential impacts of pollutants on human health and the environment. By quantifying these risks, they provide invaluable insights that guide regulatory decisions and public health initiatives. For a deeper dive into this field, check out The Art of Statistics: Learning from Data by David Spiegelhalter.

In conclusion, the applications of probability and statistics are vast and varied across civil engineering, mechanical engineering, and environmental science. These disciplines rely on data and statistical analyses to make informed decisions, enhance safety, and address pressing global issues. The next time you marvel at a bridge, engine, or a cleaner environment, remember the vital role that probability and statistics played in making it all possible.

Use of Software in Statistics

In today’s tech-savvy world, statistical software tools like R, Python, and MATLAB have become essential for engineers and scientists. These tools not only simplify complex calculations but also enhance data visualization and modeling capabilities. Let’s explore some of the most popular software used in the realm of statistics.

R is a powerful open-source programming language specifically designed for statistical analysis and data visualization. With numerous packages available, R provides a broad range of functions for everything from basic statistical tests to advanced modeling techniques. Its user-friendly nature allows engineers to perform complex analyses without getting lost in a sea of code. Plus, the ability to create stunning visualizations transforms raw data into compelling stories. If you’re interested in learning more about R, check out R for Data Science by Hadley Wickham.

Python, on the other hand, has gained immense popularity due to its versatility and ease of use. With libraries like NumPy, Pandas, and SciPy, Python provides robust tools for statistical analysis and data manipulation. Engineers can leverage Python’s capabilities to build custom algorithms, automate repetitive tasks, and integrate statistical methods into larger engineering workflows. It’s like having a Swiss Army knife for data! If you’re looking to master Python for data analysis, consider Python for Data Analysis by Wes McKinney.

MATLAB is another widely used software, particularly in engineering fields. Known for its matrix calculations and data visualization tools, MATLAB allows engineers to analyze large datasets and perform complex mathematical computations with ease. Its user-friendly interface makes it accessible for those who may not have a strong programming background. Additionally, MATLAB provides specialized toolboxes for specific applications, such as signal processing and control systems, further enhancing its utility in engineering. If you want to explore MATLAB, check out MATLAB for Engineers by Holly Moore.

These software tools not only streamline the process of data analysis but also foster collaboration among engineers and scientists. By using these platforms, professionals can share their findings, replicate analyses, and build upon each other’s work, leading to more significant advancements in their respective fields.

How These Tools Facilitate Data Analysis and Modeling

Statistical software tools have revolutionized the way engineers and scientists handle data analysis and modeling. They make complex tasks manageable, turning what could be a daunting process into a more straightforward one. Let’s look at how these tools facilitate data analysis and modeling.

Firstly, statistical software allows for efficient data management. Instead of sifting through mountains of spreadsheets or paper records, engineers can import datasets directly into the software. This capability not only saves time but also reduces the likelihood of errors associated with manual data entry. Once the data is in the system, users can easily clean, manipulate, and explore it, ensuring that they’re working with accurate and relevant information.

Secondly, these tools provide a vast array of statistical functions and models. Engineers can perform descriptive statistics, inferential statistics, and regression analyses with just a few clicks. Need to run a hypothesis test? Piece of cake! Want to create a predictive model? Just a few lines of code! This accessibility lowers the barrier to rigorous analysis, although interpreting the results correctly still depends on understanding the methods behind them.

Visualization is another significant advantage. Statistical software enables users to create informative graphs, charts, and plots that help illustrate findings and communicate results effectively. Imagine trying to explain a complex dataset without visuals—yikes! With software tools, professionals can present their analyses in a visually appealing manner, making it easier for stakeholders to understand the implications of their data.

Moreover, many statistical software packages support advanced modeling techniques, such as machine learning and simulations. By utilizing these methods, engineers can uncover patterns and insights that traditional analyses might miss. For instance, a mechanical engineer could use a machine learning algorithm to predict equipment failures based on historical performance data, allowing for proactive maintenance and reduced downtime. If you want to dive into machine learning, consider Machine Learning: A Probabilistic Perspective by Kevin P. Murphy.

Lastly, collaboration is a breeze with statistical software. Many platforms allow users to save and share their projects, enabling teams to work together seamlessly. This feature fosters knowledge sharing and encourages interdisciplinary collaboration, leading to innovative solutions to complex problems.

In summary, statistical software tools have transformed how engineers and scientists approach data analysis and modeling. By streamlining data management, providing a wide range of functions, enhancing visualization, and promoting collaboration, these tools empower professionals to make informed decisions based on solid evidence.

Advanced Topics in Probability and Statistics

Regression Analysis

Regression analysis is a cornerstone of statistical modeling in engineering and the sciences. At its core, regression is about understanding relationships between variables. It allows us to predict one variable’s value based on the value of another. Think of it as a crystal ball, but instead of magic, we rely on data and mathematical equations.

The significance of regression analysis in engineering cannot be overstated. It helps engineers assess how different factors influence outcomes, enabling them to make better decisions. For instance, in civil engineering, regression can be used to predict the load-bearing capacity of materials based on various stress tests. In mechanical engineering, it can help understand how changes in temperature affect material properties. Ultimately, regression analysis provides a framework for making predictions that can be validated through real-world observations, making it a vital tool for engineers and scientists alike.

Simple vs. Multiple Regression

Regression analysis is a powerful statistical tool for understanding relationships between variables. But not all regression is created equal! Let’s break down the difference between simple and multiple regression, and when to use each.

Simple regression involves two variables: one independent variable (predictor) and one dependent variable (outcome). Think of it as a straight line that best fits your data points. For example, if you wanted to predict the fuel efficiency of a car based on its weight, you would use simple regression. The formula? It’s as simple as Y = a + bX, where Y is the predicted value, a is the intercept, b is the slope, and X is the weight of the car. This method works best when you suspect a direct, linear relationship between two variables.
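The least-squares estimates of a and b have a closed form, sketched below in plain Python. The weights and fuel-efficiency figures are made-up illustration data, not measurements. In practice you would likely use a library routine (for example statistics.linear_regression in Python 3.10+), but the explicit formulas show what such a routine computes.

```python
def fit_simple(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least squares for Y = a + bX.
    b = sum((x - x_mean) * (y - y_mean)) / sum((x - x_mean)^2)
    a = y_mean - b * x_mean
    """
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b


# Hypothetical data: car weight (tonnes) vs fuel efficiency (km per litre).
weight = [1.0, 1.2, 1.5, 1.8, 2.0]
efficiency = [18.1, 16.9, 15.2, 13.0, 12.1]

a, b = fit_simple(weight, efficiency)
print(f"efficiency = {a:.2f} + ({b:.2f}) * weight")
print(f"predicted at 1.6 t: {a + b * 1.6:.1f} km/L")
```

A negative b here says heavier cars in this made-up sample tend to be less fuel-efficient.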

Multiple regression, on the other hand, takes things up a notch! It allows you to analyze the relationship between one dependent variable and multiple independent variables. Picture this: you’re trying to predict a student’s GPA based not only on their study hours but also on their attendance and participation in extracurricular activities. Here, multiple regression kicks in. The formula expands to Y = a + b1X1 + b2X2 + b3X3 + …, where each bi represents the expected change in Y for a one-unit increase in Xi, holding the other predictors constant.
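One way to estimate the coefficients is to solve the least-squares normal equations. The plain-Python sketch below does this with a small Gaussian-elimination helper. The GPA data are synthetic, generated from assumed coefficients so you can see the fit recover them. Real data would include noise, and production code would normally use a numerically sturdier solver such as numpy.linalg.lstsq.

```python
def solve(A: list[list[float]], v: list[float]) -> list[float]:
    """Solve A x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    M = [row[:] + [val] for row, val in zip(A, v)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back-substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_multiple(X: list, y: list[float]) -> list[float]:
    """Least squares for Y = a + b1*X1 + ... + bk*Xk via the normal equations
    (D^T D) beta = D^T y, where D is X with a leading column of ones."""
    D = [[1.0] + list(row) for row in X]
    k = len(D[0])
    DtD = [[sum(d[i] * d[j] for d in D) for j in range(k)] for i in range(k)]
    Dty = [sum(d[i] * yi for d, yi in zip(D, y)) for i in range(k)]
    return solve(DtD, Dty)  # [a, b1, ..., bk]


# Synthetic data built from assumed coefficients (a, b1, b2, b3).
hours = [10, 15, 20, 5, 12, 18]
attendance = [80, 90, 85, 70, 95, 75]
activities = [1, 2, 0, 3, 1, 2]
true_coefs = (1.0, 0.05, 0.01, 0.1)
gpa = [true_coefs[0] + true_coefs[1] * h + true_coefs[2] * att + true_coefs[3] * act
       for h, att, act in zip(hours, attendance, activities)]

coefs = fit_multiple(list(zip(hours, attendance, activities)), gpa)
print([round(c, 4) for c in coefs])  # [1.0, 0.05, 0.01, 0.1]
```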

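When should you choose one over the other? Use simple regression when you have a clear, straightforward relationship between two variables. If you suspect that multiple factors affect your outcome, then multiple regression is your best bet. It lets you estimate each predictor’s contribution while accounting for the others, bringing richer insight into your data. (To capture genuine interactions, where one predictor changes the effect of another, you would add interaction terms such as X1X2 to the model.)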

In summary, simple regression is great for direct relationships, while multiple regression shines in complex scenarios with multiple influences. Understanding these differences is key to making informed decisions in your engineering and scientific endeavors!

Importance of Correlation Coefficients

Correlation coefficients are the unsung heroes of statistics. They help us understand the strength and direction of relationships between variables. Think of them as the matchmakers of the statistical world!

A correlation coefficient (most commonly Pearson’s r) ranges from -1 to 1. A coefficient of 1 indicates a perfect positive linear relationship, meaning as one variable increases, so does the other, exactly along a straight line. Conversely, -1 indicates a perfect negative linear relationship, where one variable increases while the other decreases. A coefficient of 0 suggests no linear relationship, although the variables could still be related in a nonlinear way.

But why do these coefficients matter? They provide a quick way to gauge how closely two variables are related. For instance, in engineering, if you’re studying the relationship between material strength and temperature, a strong positive correlation would indicate that strength tends to rise as temperature rises, while a strong negative correlation would indicate that it tends to fall. This insight can guide your design choices, enhancing safety and performance, although correlation alone does not prove that temperature causes the change. And for a deeper understanding, consider Statistical Inference by George Casella and Roger L. Berger.

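Moreover, correlation coefficients are useful for checking your assumptions before jumping into regression analysis. If your variables show no linear correlation, a linear model relating them may not make sense, though it is worth plotting the data first, since a nonlinear relationship can still produce a coefficient near zero.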
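A short Python sketch of the Pearson coefficient makes the linearity caveat concrete. The last line pairs x with x squared, a perfectly deterministic relationship that nevertheless gives r = 0 on this symmetric input. The input values are arbitrary illustrations.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation: covariance divided by the product of standard deviations
    (the 1/n factors cancel, so raw sums of squares are used)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


x = [-2, -1, 0, 1, 2]
print(pearson_r(x, [2 * v + 1 for v in x]))  # 1.0  (perfect positive linear)
print(pearson_r(x, [-3 * v for v in x]))     # -1.0 (perfect negative linear)
print(pearson_r(x, [v ** 2 for v in x]))     # 0.0  (strong but nonlinear relationship)
```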

In summary, correlation coefficients are vital for understanding relationships in data. They guide decision-making, ensure meaningful analyses, and help you avoid statistical pitfalls. So next time you crunch some numbers, don’t forget to check those correlation coefficients!

Analysis of Variance (ANOVA)

ANOVA, or Analysis of Variance, is a statistical method useful for comparing multiple groups. Instead of checking if two means are different, ANOVA allows you to assess whether the means of three or more groups differ significantly. Think of it as a referee in a match between different teams, ensuring fairness in measurements!

The basic idea behind ANOVA is to partition the total variance observed in the data into components related to group differences and random error. If there’s a significant difference among the means, it suggests that at least one group is different from the others. This method is particularly handy in engineering when you want to evaluate the performance of different materials or processes under similar conditions. If you want a comprehensive guide on ANOVA, grab The Design of Experiments by Ronald A. Fisher.

ANOVA works by comparing the variability between the group means with the variability within each group. If the between-group variability is large relative to the within-group variability, it indicates that the group means are not all equal. The result is presented as an F-statistic, the ratio of the between-group mean square to the within-group mean square, which you can compare against a critical value from the F-distribution to determine significance.
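The partition described above can be computed directly. This plain-Python sketch returns the F-statistic and its degrees of freedom for a one-way ANOVA; the failure-load data for three materials are hypothetical. For a p-value you could use scipy.stats.f.sf(f, df1, df2), or call scipy.stats.f_oneway, which performs the whole test.

```python
def one_way_anova(*groups: list[float]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA.
    F = MS_between / MS_within, where each mean square is a sum of squares
    divided by its degrees of freedom."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    k, n = len(groups), len(all_values)

    # Between-group sum of squares: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: scatter of observations around their own group mean.
    ss_within = sum((v - sum(g) / len(g)) ** 2 for g in groups for v in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical failure loads (kN) for three composite materials.
mat_a = [48, 50, 52]
mat_b = [55, 57, 59]
mat_c = [49, 51, 53]
f, df1, df2 = one_way_anova(mat_a, mat_b, mat_c)
print(f"F({df1}, {df2}) = {f:.2f}")  # F(2, 6) = 10.75
```

With 2 and 6 degrees of freedom the 5% critical value is about 5.14, so F = 10.75 would suggest that at least one material’s mean failure load differs from the others.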

Applications of ANOVA are numerous, ranging from quality control in manufacturing to testing the effectiveness of different teaching methods in educational research. In engineering, it helps determine whether different materials or designs yield statistically different results, ensuring that the best option is selected.

Examples of ANOVA in Engineering Contexts

In engineering, ANOVA plays a pivotal role in decision-making processes. Here are a couple of practical examples where this technique shines.

1. Material Testing: Imagine an aerospace engineer trying to determine which composite material performs best under stress. They could design an experiment testing three different materials, loading samples of each under identical conditions and recording the failure points. By applying ANOVA, the engineer can statistically determine whether the materials’ mean failure points differ; if they do, follow-up (post hoc) comparisons show which material outperforms the others, leading to safer and more efficient aircraft designs.

2. Process Control: In a manufacturing setting, engineers may want to compare the output quality of products made using different machines or settings. By running a series of tests and collecting data on product quality across various setups, ANOVA helps determine if changes in the machine settings or types lead to statistically significant differences in product quality. This insight allows for optimized production processes, reducing waste and improving overall quality.

3. Experimental Design: In environmental engineering, researchers might evaluate the effectiveness of various pollution control methods. With ANOVA, they can analyze data from multiple sites employing different methods to ascertain which approach yields the best results. This helps policymakers and engineers make informed decisions about resource allocation and implementation strategies.

In summary, ANOVA is a powerful tool in engineering that aids in comparing multiple groups and making data-driven decisions. From material selection to process optimization, this statistical method ensures that engineers choose the best options based on solid evidence.

FAQs

What is the difference between probability and statistics?

Probability and statistics, while closely related, serve different purposes. Probability deals with predicting the likelihood of future events based on a theoretical model. It’s like forecasting the weather: with probability, you estimate the chance of rain tomorrow. Statistics, on the other hand, involves analyzing collected data to make inferences about populations or processes. It’s akin to reviewing last month’s weather to evaluate how often it rained.
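A tiny simulation captures the direction of reasoning in each field. The 30% rain probability is an assumed model, and the "observed" month is simulated from it so that the example is self-contained; with real data you would only have the observations and would have to infer the probability from them.

```python
import random

# Probability: start from a model and reason about the data it would produce.
p_rain = 0.3                       # assumed model: 30% chance of rain each day
expected_rainy_days = p_rain * 30  # about 9 rainy days expected in a 30-day month

# Statistics: start from observed data and infer the unknown parameter.
rng = random.Random(42)            # seeded so the simulated observations are reproducible
observed = [rng.random() < p_rain for _ in range(30)]  # one simulated month of weather
estimated_p = sum(observed) / len(observed)

print(f"model predicts ~{expected_rainy_days:.0f} rainy days; data suggest p = {estimated_p:.2f}")
```

The estimate will rarely equal 0.3 exactly; how far it can stray from the true value is itself a statistical question.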


All images from Pexels