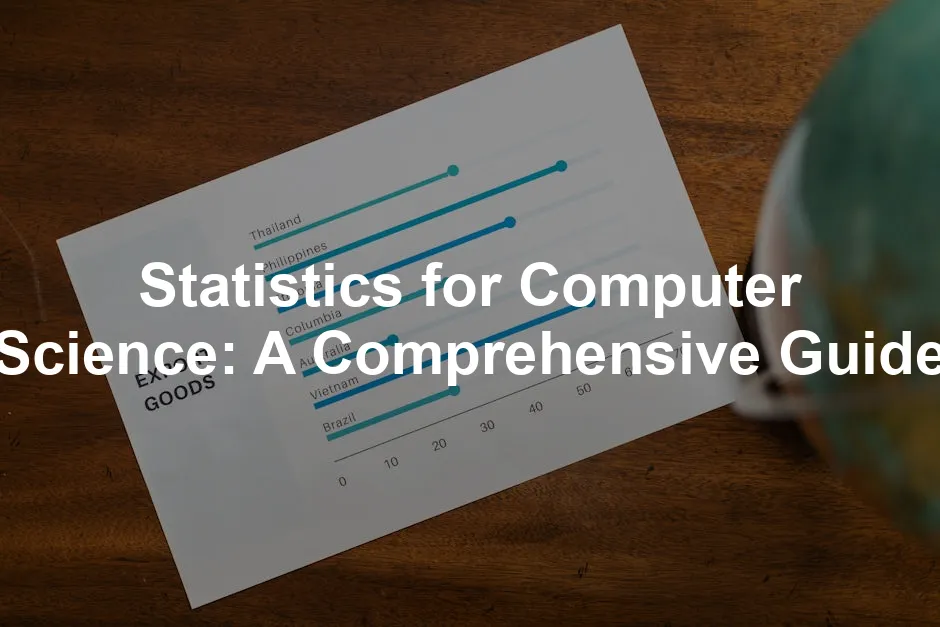

Introduction

Statistics is the backbone of computer science. Why? Well, it helps us make sense of the overwhelming amount of data swirling around us. In a world driven by data, understanding statistics is like having a map in a maze. It guides us through the twists and turns of data analysis, machine learning, and algorithm development.

Imagine being a computer scientist without the ability to analyze data. It would be like trying to bake a cake without knowing how to measure ingredients. Statistics provides the tools to summarize data, identify trends, and make predictions. Whether you’re a student, a seasoned professional, or an enthusiastic hobbyist, grasping statistical concepts is crucial.

Consider this: machine learning, one of the hottest topics in tech, relies heavily on statistical methods. From predicting user behavior to detecting fraud, statistics is behind the magic. Algorithms use statistical principles to learn from data and improve over time. Without a solid understanding of statistics, navigating the ever-evolving landscape of computer science becomes daunting.

This guide aims to illuminate the significance of statistics in computer science. We will dive into the basics, key concepts, and applications. So, buckle up! Whether you’re aiming for a career in data science or just curious about the field, understanding statistics is your first step toward success. If you’re looking for a comprehensive introduction, consider picking up Statistics for Dummies.

Understanding the Basics of Statistics

What is Statistics?

Statistics is the science of collecting, analyzing, interpreting, and presenting data. It can be divided into two main branches: descriptive and inferential statistics.

Descriptive statistics focuses on summarizing and organizing data. Think of it as the trailer of a movie, giving you a sneak peek without revealing the whole story. It includes measures of central tendency like the mean, median, and mode. These metrics help us understand the average or common values in a dataset. Descriptive statistics also includes measures of variability, such as variance and standard deviation, which tell us how spread out the data points are. For a deeper understanding of these concepts, check out The Art of Statistics: Learning from Data.

On the other hand, inferential statistics takes us a step further. It allows us to make predictions or generalizations about a population based on a sample. This is where the magic happens! By using tools like hypothesis testing and confidence intervals, we can draw conclusions that extend beyond the data we have at hand. Imagine being able to predict future events based on past trends—it’s like having a crystal ball!

Statistics plays a pivotal role in data analysis. It transforms raw data into meaningful insights. For instance, when analyzing customer behavior, statistics helps summarize preferences, identify trends, and support decision-making. Without it, data is just numbers—uninterpreted and unhelpful.

Understanding these basic concepts is essential for anyone venturing into the computer science realm. Statistics provides the foundation for more complex analyses and applications. Whether you’re developing algorithms, working on machine learning, or simply trying to make sense of data, a solid grasp of statistics will serve you well. So, let’s embrace the numbers and unlock the power of statistics in the exciting world of computer science!

Key Statistical Concepts

Descriptive Statistics

Descriptive statistics help summarize data effectively. Think of it as the intro to a great novel. You need to know the characters before diving into the plot! It includes measures like mean, median, mode, variance, and standard deviation.

- Mean: The average of your data. It’s like the final score in a game—everyone loves a good average!

- Median: The middle number when the data is sorted. Perfect for when your data likes to play favorites, because a few extreme values barely move it.

- Mode: The value that appears most often. Like that one song you can’t escape on the radio!

- Variance: The average squared distance of your data points from the mean. It’s a bit like measuring the distance between your couch and the fridge: too much variance means a long snack run.

- Standard Deviation: The square root of variance. It tells you how spread out your data is. Less spread means less chaos!
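To make the five measures above concrete, here is a minimal sketch using Python's built-in `statistics` module. The sample values are made up for illustration, and the population versions of variance and standard deviation are used, which assumes the list is the whole dataset rather than a sample from a larger one.

```python
import statistics

# Hypothetical response times in milliseconds (invented for illustration).
# The last value is a deliberate outlier.
data = [12, 15, 15, 18, 20, 22, 95]

mean = statistics.mean(data)            # arithmetic average
median = statistics.median(data)        # middle value of the sorted data
mode = statistics.mode(data)            # most frequent value
variance = statistics.pvariance(data)   # average squared distance from the mean
std_dev = statistics.pstdev(data)       # square root of the variance

print(f"mean={mean:.2f} median={median} mode={mode}")
print(f"variance={variance:.2f} std_dev={std_dev:.2f}")
```

Notice how the single outlier pulls the mean well above the median. If the list were a sample from a larger population, `statistics.variance` and `statistics.stdev` would likely be the better choice.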

Inferential Statistics

Inferential statistics allows us to make predictions about a larger population based on a smaller sample. It’s like trying to guess the flavor of a mystery ice cream based on one lick. Key tools include:

- Hypothesis Testing: It helps us decide whether to reject a null hypothesis or fail to reject it. Think of it as a courtroom drama where the evidence can tip the scales: the null hypothesis is presumed innocent until the data say otherwise.

- Confidence Intervals: These provide a range of values that is likely to contain the true population parameter, at a stated confidence level such as 95%. It’s like saying, “I’m pretty sure my favorite pizza place is within a 5-mile radius!”

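- P-values: A p-value helps us determine the significance of our results. It is the probability of seeing results at least as extreme as ours if the null hypothesis were true. A low p-value (typically ≤ 0.05) is conventionally treated as evidence against the null hypothesis, while a high p-value means the data give us no good reason to reject it, which is not the same as proving it true. It’s like a judge deciding whether a case is worth pursuing!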

Importance of Statistics in Computer Science

Computer scientists, listen up! Grasping statistical methods is crucial. Why? Because predictive modeling relies heavily on statistics. Picture a crystal ball that doesn’t just predict the future but backs it up with numbers.

Statistics guide us in making informed decisions. They help us understand patterns in data. For example, when developing algorithms, knowing how to analyze data trends can lead to more accurate predictions. To enhance your understanding, consider picking up R Programming for Data Science.

Consider a recommendation system. It uses statistical models to predict what you might like based on your past choices. Without statistics, that system might as well be throwing darts in the dark!

In short, a solid understanding of statistics empowers computer scientists to make data-driven decisions, enhancing their work’s accuracy and effectiveness. So, embrace those numbers! They’re not just digits; they’re the keys to unlocking future innovations.

Inferential Statistics

Hypothesis testing is a cornerstone of inferential statistics. It helps us evaluate the effectiveness of algorithms by comparing their performance against a null hypothesis. For example, if we develop a new sorting algorithm, we might test whether it performs significantly faster than an established one.

By using test statistics and p-values, we can judge how surprising our results would be if chance alone were at work. A p-value below a predetermined threshold (often 0.05) is conventionally taken as evidence against the null hypothesis. This method is essential in algorithm performance evaluation, allowing us to make informed decisions about which algorithms to deploy.
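One way to try this on the sorting example is a permutation test, sketched below with only the standard library. The timings are invented, and `permutation_test` is a hypothetical helper, not a library function. The idea: if both algorithms were equally fast, shuffling the labels should produce a gap as large as the observed one fairly often.

```python
import random

def mean(values):
    return sum(values) / len(values)

def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Approximate two-sided p-value for a difference in means."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign labels at random
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # The add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)

# Hypothetical run times in milliseconds (invented for illustration).
old_sort = [102, 98, 105, 110, 99, 101, 104, 107]
new_sort = [91, 95, 89, 94, 92, 90, 96, 93]

p_value = permutation_test(old_sort, new_sort)
print(f"p-value is roughly {p_value:.4f}")
if p_value < 0.05:
    print("The speed difference is unlikely to be due to chance alone.")
```

A t-test would be the more traditional choice here. The permutation test is used in this sketch because it needs no extra libraries and makes few assumptions about how the timings are distributed.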

Confidence intervals are equally important in predictive analytics. They provide a range within which we expect the true parameter to lie. For instance, if we forecast sales for the next quarter, a confidence interval gives us an estimate of uncertainty. This helps businesses prepare for various scenarios, enhancing decision-making. Understanding confidence intervals can transform vague predictions into actionable insights. To master these concepts, you could read Python for Data Analysis.
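As a rough illustration, the sketch below computes a 95% confidence interval for an average sales figure. The numbers are made up, `mean_confidence_interval` is a hypothetical helper, and the normal approximation is an assumption: with a sample this small, a t-based interval would come out somewhat wider.

```python
import math
import statistics

def mean_confidence_interval(sample, confidence=0.95):
    """Return (low, high) for the mean, using a normal approximation."""
    n = len(sample)
    sample_mean = statistics.mean(sample)
    # Standard error: sample standard deviation scaled by sqrt(n).
    std_err = statistics.stdev(sample) / math.sqrt(n)
    # z is about 1.96 for a 95% interval.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * std_err
    return sample_mean - margin, sample_mean + margin

# Hypothetical weekly sales in thousands (invented for illustration).
weekly_sales = [48, 52, 50, 47, 55, 53, 49, 51, 54, 46, 50, 52]

low, high = mean_confidence_interval(weekly_sales)
print(f"95% CI for mean weekly sales: {low:.1f} to {high:.1f}")
```

Asking for more confidence (say 99%) widens the interval, which is the trade-off between certainty and precision.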

Regression Analysis

Regression analysis is a statistical method for understanding relationships between variables. Linear regression is the simplest form. It models a dependent variable as a linear function of one or more independent variables. For example, a company might use linear regression to predict future sales based on advertising expenditure.
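Here is a minimal sketch of the advertising example with a single predictor, fitted by ordinary least squares in plain Python. The figures are invented, and `fit_line` is a hypothetical helper written for this illustration.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, and how x varies on its own.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data in thousands of dollars (invented for illustration).
ad_spend = [10, 15, 20, 25, 30, 35]
sales = [120, 150, 170, 205, 230, 250]

slope, intercept = fit_line(ad_spend, sales)
predicted = slope * 40 + intercept
print(f"sales = {slope:.2f} * ad_spend + {intercept:.2f}")
print(f"Predicted sales at an ad spend of 40: {predicted:.1f}")
```

In practice you would probably reach for a library such as scikit-learn or statsmodels, which also report how well the line fits. The hand-rolled version just shows what happens under the hood.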

Logistic regression, on the other hand, is used for binary outcomes. Imagine a bank wanting to predict whether a loan applicant will default. By using logistic regression, they can estimate the probability of default based on factors like income and credit score. This technique is crucial in various machine learning applications, from marketing to healthcare.
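To show how logistic regression turns applicant data into a probability, here is a sketch of the prediction step only. The coefficients are hypothetical and chosen by hand for illustration. A real model would learn them from historical loan data.

```python
import math

def default_probability(income_k, credit_score, coefficients):
    """Estimated probability of default from a logistic model."""
    intercept, w_income, w_score = coefficients
    # Linear score (log-odds), then squashed into the range 0..1.
    z = intercept + w_income * income_k + w_score * credit_score
    return 1 / (1 + math.exp(-z))

# Hypothetical coefficients (NOT fitted to real data): higher income
# and higher credit score both lower the estimated risk.
COEFFICIENTS = (8.0, -0.02, -0.012)

risky = default_probability(income_k=30, credit_score=550, coefficients=COEFFICIENTS)
safe = default_probability(income_k=90, credit_score=760, coefficients=COEFFICIENTS)
print(f"Applicant A: {risky:.1%} estimated chance of default")
print(f"Applicant B: {safe:.1%} estimated chance of default")
```

The bank would then pick a cut-off probability above which an application is flagged for review. Where to put that cut-off is a business decision as much as a statistical one.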

In real-world scenarios, regression models shine. Take a retail business: they can predict sales for the upcoming holiday season using historical data. Similarly, predictive models help in areas like stock market forecasting and customer behavior analysis. These examples showcase the versatility of regression analysis in making data-driven decisions. If you’re interested in diving deeper into data science, check out Machine Learning: A Probabilistic Perspective.

Statistical Distributions

Statistical distributions are fundamental in computer science. Key distributions include the normal, binomial, and Poisson distributions. The normal distribution is often referred to as the “bell curve.” It’s crucial for many statistical methods, including hypothesis testing and regression analysis.

The binomial distribution models the number of successes in a fixed number of independent trials, each with two possible outcomes, like success or failure. For instance, it can be applied in quality control to determine the likelihood of finding a given number of defective items in a batch.

The Poisson distribution, on the other hand, is perfect for modeling how many times an event occurs within a fixed interval, assuming events happen independently at a steady average rate. Imagine analyzing the number of emails received per hour at a help desk. Understanding these distributions is vital for accurate modeling and simulations. They provide the framework for drawing meaningful conclusions from data, ultimately enhancing the effectiveness of algorithms and machine learning models.
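The quality-control and help-desk examples can be sketched with the standard probability formulas for the two discrete distributions. The defect rate and email rate below are assumptions picked for illustration.

```python
import math

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, rate):
    """Probability of exactly k events when the average is `rate`."""
    return math.exp(-rate) * rate**k / math.factorial(k)

# Quality control: a batch of 20 items with an assumed 5% defect rate.
p_no_defects = binomial_pmf(0, n=20, p=0.05)
p_at_most_one = p_no_defects + binomial_pmf(1, n=20, p=0.05)
print(f"P(no defects in batch) = {p_no_defects:.3f}")
print(f"P(at most one defect)  = {p_at_most_one:.3f}")

# Help desk: an assumed average of 12 emails per hour.
p_quiet_hour = sum(poisson_pmf(k, rate=12) for k in range(6))
print(f"P(5 or fewer emails in an hour) = {p_quiet_hour:.3f}")
```

Both functions return the probability of one exact count, so questions like "at most" or "at least" are answered by summing over the relevant counts.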

Statistical Software Tools

Popular Statistical Software

In the world of data analysis, software is king. Various tools and programming languages help us wrangle statistics into submission. Let’s take a closer look at three heavyweights: R, Python, and SAS.

R

R is the rockstar of statistical analysis. It’s open-source and has a vast library of packages. Whether you need to perform regression analysis or create stunning visualizations, R has you covered. Its user community is active and supportive, making it easy to find resources or troubleshoot problems. However, R can have a steeper learning curve for beginners. It’s like learning to ride a unicycle; once you get it, you’re the life of the party!

Python

Python is the versatile sibling, loved for its simplicity and readability. With libraries like Pandas, NumPy, and SciPy, it’s a go-to for data manipulation and analysis. Plus, Python excels in machine learning with libraries like TensorFlow and scikit-learn. Its flexibility makes it perfect for integrating with web applications and data pipelines. That said, it might not have as many dedicated statistical packages as R. But hey, who doesn’t love a multitasker? You can sharpen your Python skills with an Online Course on Python Programming.

SAS

SAS, short for Statistical Analysis System, is a powerhouse in the corporate world. It’s user-friendly and offers robust data analytics capabilities. Companies often choose SAS for its strong support system and extensive documentation. On the flip side, it can be pricey, which might not sit well with budget-conscious students or small startups. Think of SAS as the fancy restaurant; it’s great for special occasions but not for everyday meals.

In summary, the choice of software largely depends on your needs and preferences. R shines in statistics and visualization, Python in versatility and integration, and SAS in corporate reliability. Each software has its strengths and weaknesses, so pick one that suits your data analysis journey.

Importance of Software in Statistical Analysis

Software is not just a tool; it’s the engine that drives statistical analysis. With the right software, you can enhance the efficiency and accuracy of your data exploration.

Efficiency

Statistical software automates complex calculations. Imagine crunching numbers by hand—yikes! With software, you can handle large datasets in no time. This efficiency allows analysts to focus on interpreting results rather than getting bogged down in tedious calculations.

Accuracy

Humans make mistakes, especially when dealing with heaps of data. Statistical software minimizes errors by using precise algorithms. This accuracy is crucial in fields like healthcare, finance, and research, where the stakes are high. It’s like having a GPS; it guides you along the right path, reducing the chances of getting lost.

Furthermore, software often includes visualization tools. These tools help you present data clearly and effectively. Visualizations can reveal trends and patterns that might go unnoticed in raw data. After all, a picture is worth a thousand words!

In essence, statistical software is invaluable. It amplifies productivity and ensures more accurate analyses, making it an essential ally for any data enthusiast. So, embrace the power of software and let it elevate your statistical analysis game!

Real-World Applications of Statistics in Computer Science

Case Studies

Statistics isn’t just a bunch of numbers; it’s a powerful tool that fuels innovation across various fields. Let’s look at some case studies that illustrate how statistical methods are applied in real-world scenarios.

Data Analysis

Take the world of retail, for example. Companies analyze customer purchasing data to identify trends and patterns. By using statistical techniques like regression analysis and clustering, they can segment customers based on buying behavior. For instance, a grocery store might discover that customers who buy organic products are also more likely to purchase gluten-free items. This insight allows them to tailor marketing strategies, boosting sales and customer satisfaction. If you’re interested in data visualization, check out a Data Visualization Book for more insights.

Algorithm Performance

Now, let’s venture into the realm of algorithms. Evaluating their performance is crucial for optimizing results. Consider a tech company that develops a new recommendation algorithm. They conduct statistical testing to compare its performance against a previous model. By employing hypothesis testing, the team can confidently determine whether the new algorithm significantly improves user engagement. This process ensures that only the best-performing algorithms make it to production, enhancing user experience overall.
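A comparison like this is often run as an A/B test. The sketch below uses a two-proportion z-test on engagement counts. The counts are invented, `two_proportion_z_test` is a hypothetical helper, and the test assumes large, independent samples of users.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference of two rates."""
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    # Under the null hypothesis both groups share one underlying rate.
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results (invented): users who clicked a recommendation.
old_clicks, old_users = 480, 4000   # previous model, 12% engagement
new_clicks, new_users = 560, 4000   # new model, 14% engagement

z, p_value = two_proportion_z_test(old_clicks, old_users, new_clicks, new_users)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A small p-value says the lift is unlikely to be noise. It does not say the lift is large enough to matter, so the raw engagement rates are worth reporting alongside it.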

Machine Learning

Machine learning thrives on statistics. To build and validate predictive models, data scientists utilize statistical methods at every stage. Imagine a healthcare startup that creates a predictive model to identify patients at risk of diabetes. They collect vast amounts of data, such as age, BMI, and family history, and apply logistic regression to analyze the relationships. By validating their model on a separate dataset, they ensure its reliability before deploying it in real-world applications. This process not only saves lives but also demonstrates the importance of statistics in healthcare advancements. And for those looking to dive deeper, a Practical Statistics for Data Scientists book can be an invaluable resource!
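The "separate dataset" step can be as simple as setting some records aside before training. Here is a minimal sketch of a hold-out split and an accuracy check. The helper names and the 80/20 ratio are assumptions for illustration, and the records are stand-in integers rather than real patient data.

```python
import random

def train_validation_split(records, validation_fraction=0.2, seed=42):
    """Shuffle a copy of the records and hold out a fraction of them."""
    rng = random.Random(seed)
    shuffled = list(records)
    rng.shuffle(shuffled)
    n_validation = int(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]

def accuracy(predictions, labels):
    """Share of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

# Stand-in patient IDs (invented). Real records would carry age, BMI, etc.
patients = list(range(100))
train, validation = train_validation_split(patients)
print(f"{len(train)} records for training, {len(validation)} held out")

# Toy check of the metric: three of four predictions match.
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))
```

The model is fitted on `train` only and scored on `validation`, so the score reflects data it has never seen. Libraries such as scikit-learn offer ready-made versions of both steps.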

Interdisciplinary Applications

Statistics also shines in interdisciplinary fields, bridging gaps between various domains. In bioinformatics, for instance, statistical methods are essential for analyzing biological data. Researchers use statistical models to interpret gene expression data, helping to identify potential biomarkers for diseases. This integration of statistics and biology accelerates medical research and enhances our understanding of complex biological systems.

In computational biology, statistics assist in modeling and simulating biological processes. For example, scientists use statistical techniques to analyze DNA sequences, identifying mutations that may lead to genetic disorders. By applying statistical inference, they can make predictions about how these mutations affect health outcomes.

Artificial intelligence (AI) is another area where statistics plays a pivotal role. Machine learning algorithms heavily rely on statistical principles to learn patterns from data. For example, in natural language processing (NLP), algorithms analyze text data using statistical models to understand context and meaning. This statistical foundation allows AI systems to improve their language understanding and generation capabilities, transforming how we interact with technology.

The Future of Statistics in Computer Science

As we gaze into the crystal ball of technology, the future of statistics in computer science looks bright. Emerging trends indicate a growing reliance on big data and computational statistics. With the explosion of data generated daily, the need for effective statistical methods to analyze this data is more critical than ever.

One significant trend is the rise of advanced machine learning techniques, such as deep learning. These methods rely on vast amounts of data and sophisticated statistical algorithms to make accurate predictions. As these techniques evolve, so too will the statistical methods that support them.

Moreover, the integration of statistics with big data technologies is transforming industries. Organizations are increasingly utilizing tools that enable real-time data analysis, allowing for quick decision-making based on statistical insights. The importance of computational statistics will continue to grow as businesses strive to harness the power of data in a rapidly changing landscape.

For those considering a career in this field, opportunities abound. Statistics and computer science skills can lead to roles in data science, machine learning engineering, and more. Continuous learning will be essential to keep pace with advancements in technology. Online courses, workshops, and industry conferences are excellent ways to stay updated on the latest developments. To get started, check out a Course on Data Science.

In conclusion, the future of statistics in computer science is dynamic and promising. Embracing new technologies and methodologies will empower professionals to tackle complex challenges and make data-driven decisions. As we navigate this exciting landscape, the fusion of statistics and computer science will undoubtedly shape the future of innovation.

All images from Pexels