Introduction

Have you ever wondered how machines predict outcomes? Logistic regression is a key player in that world. This statistical model helps classify data into categories. It’s widely used in data analytics, machine learning, and predictive modeling. Understanding logistic regression can open doors to various applications in your projects. This article aims to clarify logistic regression, its uses, and important considerations.

If you’re looking to dive deeper into statistical learning, consider grabbing a copy of An Introduction to Statistical Learning: with Applications in R. It’s a fantastic resource for anyone wanting to master the art of statistical learning.

Summary and Overview

In its basic form, logistic regression predicts binary outcomes. In simpler terms, it estimates the probability that an event happens. The logistic function is central to this method: it maps a linear combination of the predictors, which represents the log odds of the event, to a probability between 0 and 1. Many industries, like healthcare, finance, and marketing, rely on logistic regression for decision-making. There are different types of logistic regression: binary, multinomial, and ordinal. Each suits a different kind of outcome variable. It’s essential to grasp the model’s assumptions and how to interpret results accurately.

If you’re new to Python and want to analyze data effectively, check out Python for Data Analysis: Data Wrangling with Pandas, NumPy, and IPython. It’s a great book that will help you wrangle data like a pro!

What is Logistic Regression?

Logistic regression is a classification algorithm. It predicts outcomes for scenarios with two possible results, like yes/no or 0/1. Unlike linear regression, logistic regression is tailored for binary outcomes. The dependent variable here is dichotomous. This means it can only take two values.

The logistic function models probabilities, ensuring outputs remain between 0 and 1. When we apply logistic regression, we’re primarily focused on finding the relationship between independent variables and the likelihood of a certain outcome.

Key points to note:

- Logistic regression is a form of regression analysis: it models the log odds of the outcome as a linear function of the independent variables.

- Common examples of binary outcomes include pass/fail, spam/not spam, or win/lose.

- The logistic function is mathematically represented as:

P(Y=1) = \frac{1}{1 + e^{-(\beta_0 + \beta_1X_1 + \dots + \beta_nX_n)}}

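Here, P(Y=1) denotes the probability of the event occurring, X_1, \dots, X_n are the independent variables, \beta_0 is the intercept, and \beta_1, \dots, \beta_n are the coefficients estimated from the data.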
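To make the formula concrete, here is a minimal Python sketch that evaluates it for a single observation. The intercept, coefficients, and feature values are invented for illustration and do not come from a fitted model.

```python
import math

def predict_probability(intercept, coefficients, features):
    """Return P(Y=1) from the logistic function for one observation."""
    # Linear predictor: beta_0 + beta_1*X_1 + ... + beta_n*X_n
    z = intercept + sum(b * x for b, x in zip(coefficients, features))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients for study hours and attendance rate
p = predict_probability(-4.0, [0.5, 2.0], [6.0, 0.9])
print(round(p, 3))
```

Whatever values you plug in, the result always lands strictly between 0 and 1, which is exactly what makes the function suitable for modeling probabilities.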

Understanding logistic regression is foundational for anyone interested in data analysis or machine learning. By grasping these concepts, you can better apply them to real-world problems. If you’re looking for a comprehensive guide, check out The Elements of Statistical Learning: Data Mining, Inference, and Prediction. It’s packed with insights and is a must-read for data enthusiasts!

Types of Logistic Regression

Logistic regression comes in several flavors. Each type serves different scenarios based on the nature of the dependent variable. Let’s break down the three main types.

Binary Logistic Regression

This is the most common form. In binary logistic regression, the dependent variable has two possible outcomes. Think of it as a yes/no or true/false situation. A classic example is predicting whether a student will pass or fail an exam. Here, the model estimates the probability that a student passes based on independent variables like study hours and attendance. Other examples include determining if an email is spam or if a loan applicant is a risk.

Multinomial Logistic Regression

Next up is multinomial logistic regression. This type is used when the dependent variable has three or more outcomes that aren’t ordered. For instance, imagine you want to predict a person’s favorite type of transportation: car, bus, or bike. Each option is distinct and does not follow a ranking. This model evaluates how various factors, such as age and location, influence a person’s choice among multiple categories.
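Multinomial logistic regression is commonly formulated with the softmax function, which turns one linear score per category into probabilities that sum to 1. The sketch below assumes that formulation; the scores for car, bus, and bike are made up rather than estimated from data.

```python
import math

def softmax_probabilities(scores):
    """Convert one linear score per category into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(value - highest) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}

# Hypothetical linear scores for one person (not fitted values)
scores = {"car": 1.2, "bus": 0.3, "bike": -0.5}
probs = softmax_probabilities(scores)
predicted = max(probs, key=probs.get)
print(predicted, {name: round(p, 3) for name, p in probs.items()})
```

The predicted category is simply the one with the highest probability, and no ordering between the categories is assumed.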

Ordinal Logistic Regression

Lastly, we have ordinal logistic regression. This type is suitable when the dependent variable has three or more outcomes that follow a specific order. A good example is survey responses, like rating a service from poor to excellent. The model captures the relationship between the ordered responses and independent variables, like age or customer satisfaction levels. Unlike multinomial regression, the order of outcomes matters here.
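One common form of ordinal logistic regression is the proportional-odds (cumulative logit) model, which this article does not name explicitly, so treat the sketch below as one possible reading. It assumes a single linear score per respondent and a set of increasing thresholds, all invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ordinal_probabilities(linear_score, thresholds):
    """Category probabilities under a proportional-odds model.

    thresholds must be in increasing order; there is one fewer
    threshold than there are categories.
    """
    # Cumulative probabilities P(Y <= j); the last category always reaches 1.
    cumulative = [sigmoid(t - linear_score) for t in thresholds] + [1.0]
    probs = []
    previous = 0.0
    for c in cumulative:
        probs.append(c - previous)  # P(Y = j) = P(Y <= j) - P(Y <= j-1)
        previous = c
    return probs

labels = ["poor", "fair", "good", "excellent"]
# Hypothetical score and thresholds (not fitted values)
probs = ordinal_probabilities(linear_score=0.8, thresholds=[-1.0, 0.5, 2.0])
for label, p in zip(labels, probs):
    print(label, round(p, 3))
```

Because the categories share one score and differ only in their thresholds, raising the score shifts probability toward the higher ratings, which is how the model respects the ordering.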

Understanding these types is crucial for choosing the right model based on the nature of your data. Each type has unique application contexts, from healthcare predictions to customer behavior analysis. By knowing when to use each, you can ensure more accurate and meaningful results in your analyses. If you’re curious about deepening your knowledge further, grab Deep Learning by Ian Goodfellow. It’s a great read for understanding advanced concepts in machine learning!

Assumptions of Logistic Regression

Logistic regression is a powerful tool, but it comes with key assumptions that must be met for reliable results. Understanding these assumptions is critical to maintaining model integrity.

First, for binary logistic regression, the dependent variable must be binary. This means it can only take two values, such as 0 or 1. Outcomes with more categories call for the multinomial or ordinal variants, and a continuous outcome calls for a different model, such as linear regression.

Next, observations need to be independent. Each data point should not influence another. Imagine trying to predict whether a patient has a disease based on sample data. If the same patient appears several times, or if patients are related to one another, the independence assumption is violated, leading to unreliable predictions.

Finally, there should be a linear relationship between the independent variables and the log odds. This means that a one-unit change in a continuous predictor should shift the log odds of the outcome by the same amount wherever it occurs in that predictor’s range. If this assumption is not met, your model might not fit well, leading to inaccurate predictions.

By ensuring these assumptions hold true, you enhance the reliability of your logistic regression model. Neglecting them can result in flawed analyses and misguided conclusions. If you’re looking for a practical guide to understanding these concepts, consider Practical Statistics for Data Scientists: 50 Essential Concepts. It’s an essential read for anyone working with data!

Interpreting Logistic Regression Results

Interpreting results from logistic regression can initially seem tricky. However, once you grasp the concepts of coefficients and odds ratios, it becomes much clearer.

The coefficients in logistic regression represent the change in the log odds of the outcome for a one-unit change in the predictor variable. To make this more understandable, we often convert these coefficients into odds ratios. The odds ratio (OR) tells us how much the odds of the outcome increase or decrease with a unit change in the predictor.

An odds ratio greater than one indicates an increase in the odds of the event occurring. For instance, if the OR is 1.5, the odds of the outcome increase by 50% for every one-unit increase in the predictor. Conversely, an odds ratio less than one suggests a decrease in odds. If the OR is 0.5, the odds of the outcome are halved, a 50% decrease, for every one-unit increase in the predictor. An odds ratio of exactly one means the predictor leaves the odds unchanged.

Understanding the difference between log odds and probability is crucial. Log odds can be difficult to interpret directly, while probability is more intuitive. Remember, logistic regression outputs probabilities that fall between 0 and 1. You can convert log odds to probability using the formula:

P = \frac{e^{\text{log odds}}}{1 + e^{\text{log odds}}}

This transformation helps in understanding the likelihood of an event occurring based on predictor variables.
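If you want to try the conversion yourself, the short Python sketch below applies the formula in both directions. The function names are our own, chosen for illustration.

```python
import math

def log_odds_to_probability(log_odds):
    """Apply P = e^(log odds) / (1 + e^(log odds))."""
    return math.exp(log_odds) / (1.0 + math.exp(log_odds))

def probability_to_log_odds(p):
    """Inverse transformation: the log of the odds p / (1 - p)."""
    return math.log(p / (1.0 - p))

for value in (-2.0, 0.0, 2.0):
    print(value, round(log_odds_to_probability(value), 3))
# -2.0 0.119
# 0.0 0.5
# 2.0 0.881
```

Log odds of zero correspond to a 50% probability, negative values fall below 50%, and positive values rise above it.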

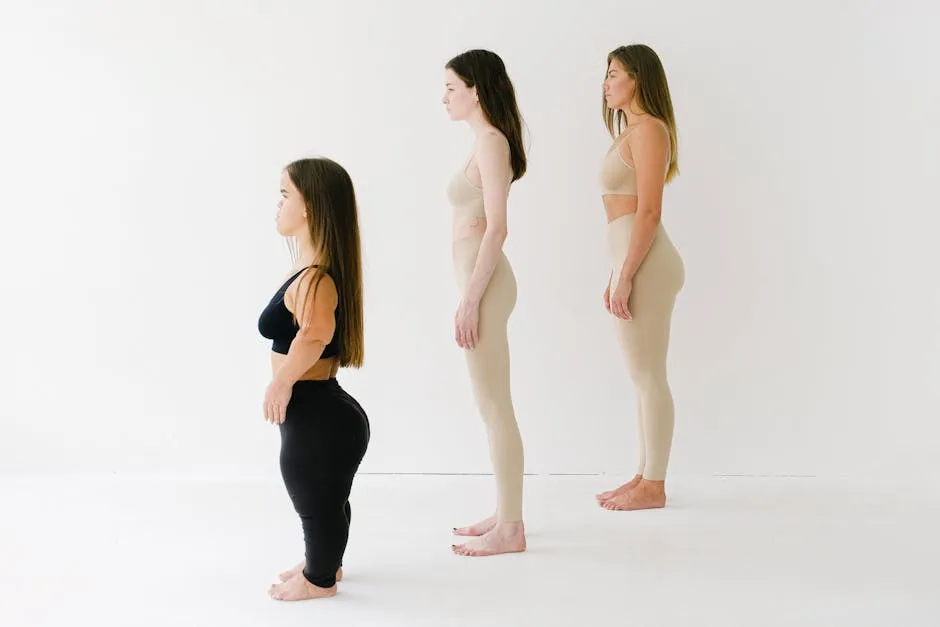

Let’s consider a practical example. Suppose we analyze a dataset predicting whether a patient has diabetes based on factors like age and BMI. If the coefficient for BMI is 0.4, the odds ratio becomes:

OR = e^{0.4} \approx 1.49

This means that for each one-unit increase in BMI, the odds of having diabetes increase by about 49%, holding age constant.
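A quick Python check of this arithmetic also shows why a 49% rise in odds is not a 49% rise in probability. The baseline probability of 0.20 is an assumption picked purely for illustration.

```python
import math

bmi_coefficient = 0.4  # coefficient from the diabetes example
odds_ratio = math.exp(bmi_coefficient)
percent_change = (odds_ratio - 1.0) * 100
print(round(odds_ratio, 2), round(percent_change))

# Hypothetical baseline: a patient whose current probability is 0.20
baseline_probability = 0.20
baseline_odds = baseline_probability / (1 - baseline_probability)
new_odds = baseline_odds * odds_ratio  # one extra unit of BMI
new_probability = new_odds / (1 + new_odds)
print(round(new_probability, 3))
```

For this hypothetical patient, the probability moves from 0.20 to roughly 0.27, so the change in probability depends on where the patient starts, while the odds ratio stays the same.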

To carry out this analysis effectively, many people turn to software tools like R or Python. In R, you can fit a logistic regression model with the glm() function by setting family = binomial. After fitting the model, use the summary() function to view coefficients and their significance. In Python, the LogisticRegression class from scikit-learn (imported as sklearn) offers similar model fitting, although it applies regularization by default and does not report significance tests. For those just starting out, Data Science from Scratch: First Principles with Python is an excellent starting point!
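To see roughly what these tools do when they fit a model, here is a from-scratch Python sketch that estimates an intercept and one slope by gradient ascent on the log-likelihood. It is a simplified stand-in, not how glm() or scikit-learn work internally (they use more refined optimizers), and the study-hours data is made up.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, learning_rate=0.1, epochs=5000):
    """Fit intercept and slope for one predictor by gradient ascent."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Observed outcome minus predicted probability, per observation
        errors = [y - sigmoid(b0 + b1 * x) for x, y in zip(xs, ys)]
        # Step along the gradient of the average log-likelihood
        b0 += learning_rate * sum(errors) / n
        b1 += learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
    return b0, b1

# Made-up data: hours studied and whether the student passed (1) or failed (0)
hours = [1, 2, 3, 4, 5, 6, 7, 8]
passed = [0, 0, 0, 1, 0, 1, 1, 1]

b0, b1 = fit_logistic(hours, passed)
print("odds ratio per extra hour:", round(math.exp(b1), 2))
print("P(pass | 6 hours):", round(sigmoid(b0 + b1 * 6), 2))
```

The slope is the change in log odds per extra hour of study, so exponentiating it gives the odds ratio discussed earlier.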

Logistic Regression vs. Other Models

When comparing logistic regression to other statistical models, notable differences arise. Logistic regression is specifically designed for binary outcomes, making it suitable for classification tasks. In contrast, linear regression is intended for continuous outcomes. Using linear regression on binary data can yield misleading results since it may predict probabilities outside the 0-1 range.
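The out-of-range problem is easy to reproduce. The sketch below fits an ordinary least-squares line to a small, made-up pass/fail dataset and then evaluates it at a few values of study hours.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return mean_y - slope * mean_x, slope

# Made-up data: hours studied and whether the student passed (1) or failed (0)
hours = [1, 2, 3, 4, 5, 6, 7, 8]
passed = [0, 0, 0, 1, 0, 1, 1, 1]

intercept, slope = fit_line(hours, passed)
for h in (0, 4, 10):
    print(h, round(intercept + slope * h, 2))
```

On this data the line predicts about -0.25 at 0 hours and about 1.42 at 10 hours, neither of which can be read as a probability. A logistic model would keep both predictions inside the 0-1 range.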

Other classification models, such as decision trees and support vector machines (SVM), also serve distinct purposes. Decision trees are intuitive and easy to interpret but can overfit with complex datasets. SVMs, on the other hand, are powerful for high-dimensional data but require careful tuning.

Logistic regression shines in situations where the relationship between predictors and the outcome is approximately linear. Additionally, it performs well with smaller datasets, where its simplicity and interpretability become advantageous.

However, logistic regression has limitations. It assumes that the relationship between the independent variables and the log odds is linear. If this assumption is violated, the predictions may be unreliable. Other models might better capture complex relationships in the data. For a more in-depth understanding of machine learning, check out Machine Learning Yearning by Andrew Ng.

In summary, the choice of model should consider the specific characteristics of your data and the problem at hand. Logistic regression is an excellent starting point for binary classification problems, but exploring other models may provide better results depending on your dataset’s complexity.

Applications of Logistic Regression

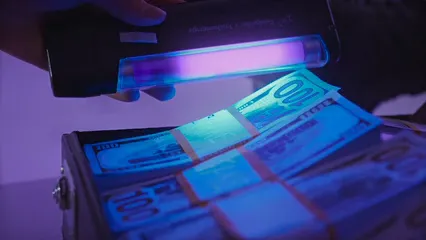

Logistic regression finds its place across various industries, proving its versatility and effectiveness. One prominent area is fraud detection. Businesses utilize logistic regression to analyze transaction patterns. For instance, banks implement this model to identify fraudulent credit card transactions. By examining historical data, they can flag unusual activities, alerting them to potential fraud. This proactive approach protects customers and reduces financial losses.

In the healthcare sector, logistic regression plays a vital role in medical diagnostics. It helps predict disease likelihood based on patient data. For example, researchers often use it to assess the risk of diabetes. By analyzing factors like age, weight, and family history, healthcare providers can identify individuals at higher risk. This enables early intervention and personalized care plans. For more on this, explore the best statistical techniques from the University of Minnesota for healthcare applications.

Another significant application is in customer retention. Companies aim to understand why customers leave. Using logistic regression, they analyze customer behavior and demographics. A practical example includes a subscription service predicting churn rates. By identifying key factors, businesses can implement targeted strategies to retain customers, ultimately boosting loyalty and revenue.

Real-world case studies further illustrate the impact of logistic regression. A notable example is First Tennessee Bank, which improved profitability through predictive analytics. By leveraging logistic regression, they reported a 600% increase in cross-sale campaigns. This success demonstrates the model’s practical value and its ability to influence business outcomes positively.

Looking ahead, the future of logistic regression applications appears promising. As data continues to grow, businesses will increasingly rely on models like logistic regression for insights. With advancements in machine learning and AI, logistic regression will integrate more seamlessly into predictive analytics tools, enhancing its capabilities. This evolution could lead to even more innovative applications across various sectors, from finance to healthcare. If you’re interested in exploring more about data mining, consider Data Mining: Concepts and Techniques. It’s a fundamental read for understanding the intricacies of data mining!

Key Takeaways

- Logistic regression is essential in fraud detection, healthcare, and customer retention.

- Real-world examples highlight its effectiveness and business impact.

- Future trends suggest growing integration with advanced analytics and machine learning.

Challenges and Limitations

Despite its strengths, logistic regression faces challenges. One common issue is overfitting. This occurs when the model learns the training data too well, failing to generalize to new data. As a result, predictions can be inaccurate. To combat this, regularization techniques can be employed. These methods add a penalty to the model, discouraging excessive complexity.
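To illustrate how a penalty discourages complexity, the sketch below adds an L2 (ridge) penalty to a simple gradient-based fit and compares the slope at three penalty strengths. The data, the fitting routine, and the penalty values are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_l2(xs, ys, penalty, learning_rate=0.1, epochs=3000):
    """Gradient ascent on the log-likelihood minus an L2 penalty on the slope."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [y - sigmoid(b0 + b1 * x) for x, y in zip(xs, ys)]
        b0 += learning_rate * sum(errors) / n
        # The penalty term pulls the slope toward zero; the intercept is not penalized.
        gradient = sum(e * x for e, x in zip(errors, xs)) / n - penalty * b1
        b1 += learning_rate * gradient
    return b0, b1

# Made-up, centred predictor with a noisy binary outcome
xs = [-3, -2, -1, -0.5, 0.5, 1, 2, 3]
ys = [0, 0, 0, 1, 0, 1, 1, 1]

slopes = {}
for penalty in (0.0, 0.1, 1.0):
    _, slopes[penalty] = fit_logistic_l2(xs, ys, penalty)
    print(penalty, round(slopes[penalty], 3))
```

The stronger the penalty, the smaller the fitted slope. In scikit-learn, LogisticRegression regularizes by default, and its C parameter sets the inverse of the regularization strength, so smaller values of C mean a stronger penalty.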

Another concern is multicollinearity. This happens when independent variables are highly correlated. It can distort the model’s coefficients, making interpretation difficult. To mitigate this, one strategy is to remove or combine correlated variables. This simplifies the model and enhances clarity.
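A simple first check for multicollinearity is to compute pairwise correlations between predictors and flag the pairs that move together. The sketch below does this in plain Python; the patient data is invented, and the 0.8 cutoff is a rule of thumb we assume here, not a fixed standard.

```python
import math

def pearson_correlation(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    covariance = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return covariance / math.sqrt(var_a * var_b)

# Made-up predictors: weight and BMI move together, age does not
predictors = {
    "weight_kg": [60, 72, 85, 90, 68, 100, 77, 95],
    "bmi": [21.0, 24.5, 28.0, 30.1, 23.0, 33.5, 25.9, 31.0],
    "age": [34, 51, 29, 62, 45, 38, 57, 41],
}

THRESHOLD = 0.8  # assumed rule-of-thumb cutoff
names = list(predictors)
flagged = []
for i, first in enumerate(names):
    for second in names[i + 1:]:
        r = pearson_correlation(predictors[first], predictors[second])
        if abs(r) > THRESHOLD:
            flagged.append((first, second))

print(flagged)
```

Here only the weight and BMI pair is flagged, which suggests keeping one of the two or combining them. Pairwise correlation can miss collinearity that involves three or more variables, so treat it as a starting point.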

Additionally, if model assumptions are violated, consequences can arise. For instance, logistic regression assumes a linear relationship between independent variables and log odds. If this condition isn’t met, the model’s predictions may not hold. Thus, it’s crucial to validate the model using techniques like cross-validation to assess its performance reliably. For those who want to dive deeper into predictive modeling, consider Applied Predictive Modeling. It’s a great guide for practical applications!
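Cross-validation can be sketched in a few lines: split the data into k folds, fit on k-1 of them, score on the fold left out, and average the scores. The version below uses a from-scratch one-predictor fit, made-up data, and strided folds instead of random shuffling, all of which are simplifications for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, learning_rate=0.1, epochs=2000):
    """One-predictor logistic regression fitted by gradient ascent."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [y - sigmoid(b0 + b1 * x) for x, y in zip(xs, ys)]
        b0 += learning_rate * sum(errors) / n
        b1 += learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
    return b0, b1

def cross_validated_accuracy(xs, ys, k):
    """Average held-out accuracy over k folds (every k-th observation forms a fold)."""
    scores = []
    for fold in range(k):
        test_idx = [i for i in range(len(xs)) if i % k == fold]
        train_idx = [i for i in range(len(xs)) if i % k != fold]
        b0, b1 = fit_logistic([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        # Classify as 1 when the predicted probability reaches 0.5
        correct = sum(
            (sigmoid(b0 + b1 * xs[i]) >= 0.5) == bool(ys[i]) for i in test_idx
        )
        scores.append(correct / len(test_idx))
    return sum(scores) / k

# Made-up, centred predictor with a noisy binary outcome
xs = [-3, -2.5, -2, -1.5, -1, -0.5, 0.5, 1, 1.5, 2, 2.5, 3]
ys = [0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1]

accuracy = cross_validated_accuracy(xs, ys, k=4)
print(round(accuracy, 2))
```

Because every observation is scored by a model that never saw it during fitting, the averaged accuracy is a more honest estimate of performance on new data than accuracy measured on the training set.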

Key Points

- Overfitting and multicollinearity are common challenges.

- Regularization techniques and variable adjustments can help.

- Model validation is critical to ensure reliable predictions.

Conclusion

Logistic regression is vital in data analysis. It helps us understand relationships between variables and predict binary outcomes. This model is widely used in various fields, including healthcare, finance, and marketing. Its ability to provide probabilities enables organizations to make informed decisions.

In healthcare, for example, logistic regression predicts disease risk based on patient data. In finance, it assesses loan applications by determining the likelihood of default. By understanding these predictions, professionals can develop effective strategies tailored to their needs. If you’re looking for a great read on data science applications, check out Data Science for Business: What You Need to Know about Data Mining and Data-Analytic Thinking. It’s a fantastic resource!

I encourage you to explore further resources on logistic regression. Implementing this powerful tool can enhance your projects. Consider enrolling in courses on advanced statistical methods and machine learning. The insights gained can significantly impact your decision-making processes.

FAQs

What is the main difference between logistic regression and linear regression?

Logistic regression predicts binary outcomes, while linear regression estimates continuous outcomes. In logistic regression, the dependent variable is dichotomous, limited to two values. Linear regression, on the other hand, can produce values beyond the 0-1 range, making it unsuitable for binary classification tasks.

Can logistic regression be used for multi-class classification?

Yes, logistic regression can handle multi-class classification through multinomial logistic regression. This approach models outcomes with three or more unordered categories. It evaluates how various independent variables influence the probability of each category.

What are some common applications of logistic regression?

Logistic regression is used in numerous fields. In healthcare, it predicts patient outcomes. In finance, it assesses credit risk. Marketing teams use it to analyze customer behavior and optimize strategies. Each application demonstrates the versatility of this statistical method.

What are the assumptions of logistic regression?

The key assumptions include:

- The dependent variable must be binary.

- Observations should be independent.

- There should be a linear relationship between independent variables and log odds.

Understanding these assumptions is essential for reliable results.

How do you interpret the odds ratio in logistic regression?

The odds ratio indicates how a unit change in an independent variable affects the odds of the outcome. For example, an odds ratio of 1.5 means the odds increase by 50% for each one-unit increase in the predictor. Conversely, an odds ratio of 0.5 indicates a 50% decrease in odds.

All images from Pexels