Introduction

Understanding the difference between parameters and statistics is crucial in the field of statistics. These terms are not just jargon; they form the backbone of data analysis and research. Without grasping these concepts, one might as well try to navigate a maze blindfolded—frustrating, right?

Let’s break it down! A parameter is a numerical value that characterizes an entire population. Think of it as the ultimate goal for statisticians. They aim to uncover these elusive numbers, but the tricky part is that parameters often remain unknown because measuring an entire population is, let’s face it, impractical.

On the other hand, a statistic refers to a numerical value calculated from a sample taken from the population. This means that while a statistic might offer a glimpse into a population’s characteristics, it’s just a piece of the puzzle. Kind of like peeking through a keyhole instead of walking through the door!

This distinction has real practical consequences in research, data analysis, and decision-making. When researchers collect data, they usually work with a sample and use it to draw conclusions about a larger population. Knowing which values are parameters and which are statistics tells you which numbers describe the population itself and which are estimates that carry uncertainty. That is what keeps the resulting inferences accurate and reliable.


Understanding Parameters and Statistics

What is a Parameter?

A parameter is a fixed numerical value that describes a characteristic of an entire population. It’s like the ultimate truth about a group of individuals. Parameters are often denoted using Greek letters, such as μ for mean or σ for standard deviation.

Parameters possess unique characteristics:

- Fixed Numerical Value: Unlike statistics, parameters do not vary from sample to sample. For a given population at a given time, a parameter has one true value.

- Whole Population Representation: A parameter encompasses all members of a population. For example, consider the average income of all households in a country. That number, if we could measure it, would be a parameter.

- Examples:

  - The mean height of all students in a school.

  - The average age of all participants in a marathon.

  - The total revenue generated by all businesses in a city.

Parameters play a vital role in statistical analysis. They provide the benchmark against which sample statistics can be compared. However, since parameters are often unknown, statisticians rely on sample data to estimate these values, leading us to the next point.

What is a Statistic?

A statistic, on the other hand, is derived from a sample and can vary with different samples. Think of statistics as the enthusiastic sidekick to parameters. They help us estimate what the parameters might be, but they come with a caveat—they can change based on the sample used.

Characteristics of statistics include:

- Sample-Based: Statistics are calculated from a subset of the population, so they are subject to variability. If you take multiple samples, the statistic from each one will usually differ, sometimes by a noticeable amount.

- Variable: Unlike parameters, statistics can change. Every time you take a new sample, you could end up with a different statistic.

- Examples:

  - The average height of a sample of 30 students from the same school.

  - The mean income calculated from a random selection of households.

  - The proportion of voters supporting a particular candidate based on a survey.

Statistics are essential for making inferences about populations from sample data. They allow researchers to estimate parameters and to carry out hypothesis tests. However, because a statistic changes from sample to sample, any conclusion drawn from it carries some uncertainty.
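A short simulation makes the fixed-versus-variable contrast concrete. This is a minimal sketch under assumed conditions: the population is a hypothetical, randomly generated list of 10,000 student heights (in centimetres), and each "study" draws a simple random sample of 30 students. The population mean μ is computed once and never changes, while the sample mean x̄ shifts from one sample to the next.

```python
import random
from statistics import mean

random.seed(42)  # fixed seed so the illustration is reproducible

# Hypothetical population: 10,000 heights in cm (made-up data for illustration)
population = [random.gauss(170, 10) for _ in range(10_000)]

# Parameter: computed from every member of the population, so it is one fixed number
mu = mean(population)

# Statistics: each simple random sample of 30 gives its own sample mean
sample_means = [mean(random.sample(population, 30)) for _ in range(5)]

print(f"population mean (mu): {mu:.2f}")
for i, x_bar in enumerate(sample_means, start=1):
    print(f"sample {i} mean (x-bar): {x_bar:.2f}")
```

Each sample mean lands near μ but not exactly on it, and no two samples are guaranteed to agree.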

Overall, the distinction between parameters and statistics is foundational in statistics. Parameters represent the whole, while statistics provide insights into parts. Recognizing this difference is key to successful data analysis and informed decision-making.

Key Differences Between Parameters and Statistics

Conceptual Differences

In the world of statistics, parameters and statistics dance a delicate tango. They may seem similar, but they each play unique roles in data analysis.

A parameter is a numerical value that describes a characteristic of a population. Imagine it as the ultimate goal for researchers. It represents the whole group, like the average height of every adult in a country. But here’s the catch: parameters often remain unknown. Why? Because measuring an entire population is about as practical as trying to catch smoke with your bare hands.

On the flip side, we have statistics, which are derived from a sample of the population. Because they depend on which members happen to end up in the sample, they can change from one sample to the next. For instance, if you survey 100 people and record the share who name pepperoni as their favorite pizza topping, asking a different group of 100 people will probably give a somewhat different share.

So, the relationship between parameters and statistics is like that of a parent and child. The parameter is the parent, holding the ultimate truth about the population. The statistic is the child, trying to mimic the parent’s traits but often falling short. Researchers use statistics to make educated guesses about parameters, understanding that these estimates come with a margin of error.

Understanding these conceptual differences is vital. It sets the stage for effective data analysis and informed decision-making. If you mistake a statistic for a parameter, you might find yourself lost in a sea of misleading conclusions. Keep this in mind: while parameters aim for the big picture, statistics offer a snapshot of the smaller pieces.

Practical Implications

Grasping the differences between parameters and statistics is crucial for anyone involved in research or data analysis. First off, using statistics to estimate parameters is a common practice. But why does this matter? Because the accuracy of your estimates hinges on knowing which is which.

In research, the goal is often to make inferences about a population using a sample. This is where the practical implications come into play. If you misidentify a statistic as a parameter, you might draw conclusions that are as shaky as a house of cards. For example, if a survey of college students reveals that 60% enjoy studying at coffee shops, that statistic might not accurately reflect the entire student population. It’s just a glimpse, a piece of the puzzle.

Moreover, understanding this distinction shapes how you collect and analyze data. If you recognize that your statistic is only an estimate, you can treat your conclusions with the appropriate caution, for example by reporting a confidence interval or running a hypothesis test to gauge how reliable your findings are. This practice helps you avoid sweeping generalizations based on a single, possibly unrepresentative, sample.
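To see how a confidence interval expresses that caution, here is a sketch for the coffee-shop example. The sample size of 400 students is an assumption (the example gives none), and the interval is the simple normal-approximation (Wald) interval for a proportion, which is one common choice rather than the only one.

```python
from math import sqrt
from statistics import NormalDist

def proportion_ci(successes, n, confidence=0.95):
    """Normal-approximation (Wald) confidence interval for a population proportion."""
    p_hat = successes / n                           # sample proportion (a statistic)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    margin = z * sqrt(p_hat * (1 - p_hat) / n)      # margin of error
    return p_hat - margin, p_hat + margin

# Hypothetical survey: 240 of 400 students (60%) enjoy studying at coffee shops
low, high = proportion_ci(240, 400)
print(f"95% CI for the population proportion: {low:.3f} to {high:.3f}")  # 0.552 to 0.648
```

The interval is a statement about the unknown population proportion, built from the sample statistic; with a smaller hypothetical sample it would be wider.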

In summary, the practical implications of distinguishing between parameters and statistics are immense. They guide researchers in making informed decisions, ensuring that conclusions drawn from data are both accurate and reliable. So, whether you’re analyzing customer satisfaction or studying public health, remember: knowing the difference can save you from a statistical misadventure.

Statistical Notation

Parameter Notation

Let’s talk symbols! In the realm of statistics, notation is like a secret language. Each symbol represents a different aspect of our data. When it comes to parameters, several common symbols take center stage.

The mean of a population is often denoted as μ (Greek letter mu). This little symbol holds a lot of power, representing the average value of a characteristic across the entire population. For example, if you wanted to express the average income of all households in a city, you’d use μ.

Next up is the standard deviation, represented by σ (Greek letter sigma). This symbol indicates the variability of the entire population. Understanding σ helps researchers grasp how much individual data points differ from the mean.

Finally, we have P, which denotes the population proportion. This symbol comes into play when researchers want to express how many individuals in a population possess a particular characteristic.
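For reference, these are the usual textbook definitions for a finite population of N values x_i, where X is the number of members with the characteristic of interest (N, x_i, and X are notation introduced here for illustration):

$$
\mu = \frac{1}{N}\sum_{i=1}^{N} x_i,
\qquad
\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i - \mu\right)^2},
\qquad
P = \frac{X}{N}
$$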

These symbols are essential for clear communication among statisticians. They help convey complex ideas simply and effectively. So, the next time you encounter these symbols, remember that they represent the backbone of parameter notation. Understanding them is key to mastering the art of statistics.

Statistic Notation

In the world of statistics, notation is crucial for conveying information efficiently. Each symbol carries specific meanings, making it easier for statisticians to communicate complex ideas.

Let’s start with the sample mean, denoted x̄ (pronounced “x-bar”). This symbol represents the average value calculated from a sample. For instance, if you ask 50 people how many toppings they usually order on a pizza, the average of their 50 answers is x̄.

Next up, we have the sample standard deviation, represented by s. This symbol measures the amount of variation or dispersion in a set of sample data. Think of it as the statistician’s way of saying, “Hey, not everyone loves pineapple on their pizza!”

Another important symbol is p̂ (pronounced “p-hat”), which represents the sample proportion. This symbol is used when you’re interested in the fraction of a sample that exhibits a certain characteristic. For example, if you survey 200 people and find that 60 like deep-dish pizza, then p̂ would be 0.3 (or 30%).

Additionally, we use n to denote the sample size. This number tells us how many observations are included in the sample. A larger n typically leads to more reliable statistics, much like how a bigger pizza means more slices for everyone!
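These sample symbols map directly onto functions in Python’s standard-library statistics module. This is a minimal sketch with made-up data: the topping counts are hypothetical, and the proportion reuses the 60-of-200 deep-dish example. Note that statistics.stdev divides by n − 1 (the usual definition of s), whereas statistics.pstdev divides by the number of values, which matches the population σ.

```python
from statistics import mean, stdev, pstdev

# Hypothetical sample: number of toppings each of 10 respondents usually orders
toppings = [1, 2, 2, 3, 1, 4, 2, 3, 2, 0]

n = len(toppings)       # sample size n
x_bar = mean(toppings)  # sample mean x-bar
s = stdev(toppings)     # sample standard deviation s (divides by n - 1)

# Sample proportion p-hat from the deep-dish example: 60 of 200 respondents
p_hat = 60 / 200

print(f"n = {n}, x-bar = {x_bar}, s = {s:.3f}, p-hat = {p_hat}")
# pstdev treats the data as a whole population (divides by n), so it comes out smaller than s
print(f"pstdev (population formula) = {pstdev(toppings):.3f}")
```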

These notations help statisticians articulate their findings clearly. Using these symbols allows for a concise representation of complex ideas, making it easier to compare results across studies.

Examples Illustrating Parameters vs. Statistics

Real-Life Examples

Understanding the difference between parameters and statistics is essential for grasping how data shapes our world. Let’s break it down with some real-life examples.

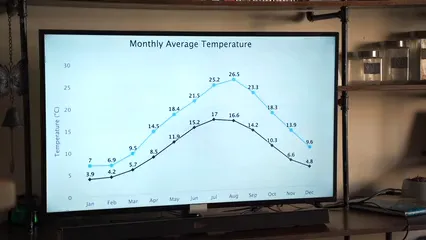

One classic scenario involves household income. Suppose the average income of all households in the United States is $70,000. That figure would be a parameter, because it describes the entire population of households. However, surveying every household is nearly impossible, so researchers survey a sample instead. If they survey 1,000 households and find that their average income is $68,000, that number is a statistic. It provides a snapshot of a subset of the population rather than the whole picture.

Another example comes from healthcare. Imagine a study of blood pressure among adults. The parameter might be the average blood pressure of all adults in a country, say 120/80 mmHg. To estimate it, researchers might measure the blood pressure of 500 adults and obtain an average of 125/82 mmHg, which is a statistic. The statistic offers valuable insight, but it is an estimate of the parameter, not a replacement for it.

Now consider a sports context. The parameter could be the average score of all teams in a league throughout the season. Say it’s 75 points per game. However, if a researcher looks at just one game and finds the average score there is 70 points, that’s a statistic. It reflects only a limited view of the larger picture.

These examples illustrate how parameters provide overarching truths about populations, while statistics offer estimates based on samples. Understanding this distinction is critical for interpreting data accurately, whether you’re analyzing public opinion, health data, or sports performance.


Comparison Table

To further clarify the differences between parameters and statistics, here’s a handy comparison table:

| Feature | Parameter | Statistic |
|---|---|---|
| Definition | A numerical value describing the entire population | A numerical value derived from a sample |
| Example | Average income of all households in a country | Average income from a sample of households |
| Notation | Often denoted with Greek letters (e.g., μ for mean) | Typically denoted with Latin letters (e.g., x̄ for mean) |
| Variability | Fixed and constant for the population | Can vary based on the sample |
| Use | Provides a complete view of the population | Estimates characteristics of the population |
| Collection | Measuring it directly requires data on the entire population (a census) | Obtained through sampling |

This table emphasizes the key distinctions, helping readers quickly grasp the differences between parameters and statistics. By understanding these concepts, researchers can make more informed decisions in their analyses, ensuring that conclusions drawn are both valid and reliable.

Estimating Population Parameters Using Statistics

Importance of Sampling

When it comes to estimating population parameters, sampling is not just important—it’s essential! Think of it as the shortcut through a long, winding road. Instead of surveying every single individual in a population, researchers rely on samples to gather data. This approach saves time, money, and resources while still providing valuable insights.

But what makes a good sample? Random sampling is key. In a simple random sample, every member of the population has an equal chance of being selected. Imagine a bag of jellybeans: if you want to know what share of the bag each flavor makes up, you wouldn’t just pick out the red ones, right? By selecting at random, you’re more likely to get a mix that represents the entire bag.
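Picking at random is easy to express in code. This is a minimal sketch, assuming a hypothetical bag of 1,000 jellybeans with made-up flavor counts: random.sample draws without replacement so that every bean is equally likely to be chosen, and the sample’s flavor shares (statistics) can then be compared with the bag’s true shares (parameters).

```python
import random
from collections import Counter

random.seed(7)  # reproducible illustration

# Hypothetical bag: 1,000 jellybeans with made-up flavor counts
bag = ["cherry"] * 400 + ["lemon"] * 350 + ["lime"] * 250

# Simple random sample of 100 beans, drawn without replacement
sample = random.sample(bag, 100)

population_shares = {f: c / len(bag) for f, c in Counter(bag).items()}   # parameters
sample_shares = {f: c / len(sample) for f, c in Counter(sample).items()}  # statistics

for flavor in sorted(population_shares):
    print(f"{flavor}: bag {population_shares[flavor]:.2f}, "
          f"sample {sample_shares.get(flavor, 0):.2f}")
```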

Now, let’s talk about representative samples. A representative sample mirrors the characteristics of the larger population. This means that if your population is 60% women and 40% men, your sample should reflect that ratio. Failing to do so might lead to skewed results. After all, if you only measure professional basketball players to gauge the average height of all adults, you’ll end up with a tall bias!
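One common way to build a sample that mirrors a 60% / 40% split is proportional stratified sampling: divide the population into groups (strata) and draw a simple random sample from each group in proportion to its size. The technique name and the helper below are additions for illustration, not something the text above prescribes, and the population is hypothetical.

```python
import random

def stratified_sample(population, key, n):
    """Proportional stratified sample: each stratum contributes in proportion to its size.

    Rounding can make the total differ slightly from n when shares don't divide evenly.
    """
    strata = {}
    for item in population:
        strata.setdefault(key(item), []).append(item)
    sample = []
    for members in strata.values():
        k = round(n * len(members) / len(population))
        sample.extend(random.sample(members, k))  # simple random sample within the stratum
    return sample

random.seed(1)
# Hypothetical population: 600 women and 400 men, each tagged with an ID
people = [("woman", i) for i in range(600)] + [("man", i) for i in range(400)]
sample = stratified_sample(people, key=lambda person: person[0], n=50)
women = sum(1 for sex, _ in sample if sex == "woman")
print(f"sample size {len(sample)}, women {women}, men {len(sample) - women}")  # 50, 30, 20
```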

Sampling also allows researchers to make inferences about the population. When data come from a representative sample, researchers can use statistical analysis to estimate population parameters such as means or proportions. For instance, if a large random sample reveals that 70% of people prefer chocolate ice cream, researchers can reasonably infer that a majority of the entire population does too.

Moreover, sampling reduces the burden of data collection. Surveying an entire population is often impractical, especially for massive groups like all citizens of a country. In such cases, a well-designed sample can capture the key characteristics of the population efficiently and effectively.

In conclusion, sampling is a cornerstone of statistical research. It enables researchers to gather meaningful data without the headaches of surveying everyone. By utilizing random and representative samples, they can draw valid conclusions about population parameters, making research not just feasible, but also reliable.

Statistical Inference

Statistical inference is where the magic happens. This process uses sample statistics to estimate population parameters. Essentially, it’s the bridge connecting our limited view of a sample to the vast ocean of the population.

So, what exactly is statistical inference? It involves making educated guesses about a population based on the data collected from a sample. This means researchers can use sample results to form conclusions about the entire population, even if they haven’t collected data from every individual.

One crucial aspect of statistical inference is the confidence interval: a range, computed from the sample, that is likely to contain the true population parameter. For example, suppose a sample of students has an average height of 5’6” and a confidence interval (say, at the 95% level) of 5’5” to 5’7”. The confidence level describes the method: intervals built this way capture the true population mean in about 95% of repeated samples, so we can be fairly confident that the average height of all students falls within that range. It’s like peeking behind the curtain; you’re not just taking a shot in the dark!
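A confidence interval for a mean can be sketched in a few lines. Assumptions: the heights (in inches) are hypothetical, and the interval uses the large-sample normal approximation x̄ ± z · s/√n with Python’s statistics.NormalDist. For small samples a t critical value is usually used instead, which gives a slightly wider interval.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def mean_ci(data, confidence=0.95):
    """Large-sample (normal-approximation) confidence interval for a population mean."""
    n = len(data)
    x_bar = mean(data)                              # sample mean
    se = stdev(data) / sqrt(n)                      # estimated standard error of x-bar
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return x_bar - z * se, x_bar + z * se

# Hypothetical heights in inches for a sample of 30 students
heights = [64, 66, 67, 65, 68, 66, 63, 67, 66, 65,
           69, 64, 66, 67, 65, 66, 68, 64, 66, 67,
           65, 66, 67, 66, 64, 68, 66, 65, 67, 66]
low, high = mean_ci(heights)
print(f"x-bar = {mean(heights):.2f} in, 95% CI: {low:.2f} to {high:.2f} in")
```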


Another important technique is hypothesis testing. This method allows researchers to test assumptions about a population. Let’s say a new teaching method claims to improve student performance. Researchers can use hypothesis testing to determine whether there’s enough evidence to support this claim. By comparing the sample data with what the null hypothesis (the assumption that the method has no effect) would predict, they can judge whether the evidence is strong enough to reject that assumption and conclude that the method likely helps.
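The text doesn’t name a specific test, so the sketch below uses one simple option: an exact two-sided permutation test for a difference in means. Under the null hypothesis (no effect), the labels “new method” and “old method” are interchangeable, so the p-value is the share of all relabelings whose difference is at least as extreme as the one observed. The scores are hypothetical.

```python
from itertools import combinations
from statistics import mean

def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in means.

    Null hypothesis: the group labels don't matter (no effect), so every way of
    splitting the pooled scores into groups of the original sizes is equally likely.
    Returns the observed difference in means and the p-value.
    """
    pooled = group_a + group_b
    observed = mean(group_a) - mean(group_b)
    as_extreme = total = 0
    for idx in combinations(range(len(pooled)), len(group_a)):
        chosen = set(idx)
        a = [pooled[i] for i in idx]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Count relabelings whose difference is at least as large in magnitude
        if abs(mean(a) - mean(b)) >= abs(observed) - 1e-9:
            as_extreme += 1
    return observed, as_extreme / total

# Hypothetical test scores (made-up data for illustration)
new_method = [78, 85, 82, 90, 88, 84]
old_method = [72, 75, 80, 70, 77, 74]

observed, p_value = permutation_test(new_method, old_method)
print(f"difference in means: {observed:.2f}, p-value: {p_value:.4f}")
```

A small p-value says the observed gap would be unusual if the method had no effect; it does not by itself measure how large or important the effect is.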

Statistical inference not only enhances our understanding of data but also allows for decision-making based on evidence. For example, companies use sampling and inference to gauge customer satisfaction or product quality. Instead of asking every customer for feedback, they can survey a representative group and generalize the results to the entire customer base.

In summary, statistical inference is a powerful tool that transforms sample data into meaningful insights about populations. Through confidence intervals and hypothesis testing, researchers can draw valid conclusions, guiding decisions and enhancing our understanding of the world.

Identifying Parameters and Statistics in Research

Key Considerations

When researchers embark on the journey of data analysis, distinguishing between a parameter and a statistic is crucial. But how do you know which is which? Here are some key considerations to help you navigate this distinction.

First, consider the source of the data. If the value represents the entire population, it’s a parameter. For instance, if you know the average height of everyone in your city, that’s a parameter. However, if you only have the average height from a sample of 100 residents, that’s a statistic. Remember, parameters are like the ultimate truth, while statistics are your best guess based on a piece of the puzzle.

Next, think about how the group you measured relates to the group you want to describe. A small group is not automatically a sample. If you survey 50 employees of a much larger company about their job satisfaction, the results are statistics, because they come from a sample rather than the entire company. But if the company has exactly 50 employees and all of them respond, the same numbers describe the whole population and are parameters.

Another consideration is feasibility. If measuring the entire population is impractical or impossible, you’re likely looking at a statistic. Take the average income of all citizens in a country—good luck gathering that data! Instead, researchers rely on sample statistics to make inferences about the population.

Also, check the context of the study. If the values come from a census covering the entire population of interest, they are parameters. If the study relies on sampling, as most research does, the values it reports are statistics, even when its ultimate goal is to learn about population parameters.

Finally, consider the notation. Parameters typically use Greek letters, such as μ for the mean or σ for the standard deviation. On the other hand, statistics often use Latin letters, like x̄ for the sample mean and s for the sample standard deviation. This convention helps researchers quickly identify which values they’re working with.

In conclusion, recognizing whether a value is a parameter or a statistic hinges on these key considerations. By examining the source, size, feasibility, context, and notation, researchers can navigate the waters of data analysis with confidence. Understanding this distinction is essential for making informed decisions and drawing accurate conclusions in research.

Common Mistakes to Avoid

Navigating the waters of statistics can be treacherous, especially when it comes to distinguishing between parameters and statistics. Even seasoned researchers sometimes trip over these two concepts. So, let’s shine a light on some common pitfalls to help you avoid sinking in a sea of confusion.

- Confusing Definitions: One of the biggest blunders is mixing up the definitions of a parameter and a statistic. A parameter describes an entire population, while a statistic is derived from a sample. It’s like mistaking a full pizza for a single slice. Always remember: parameters are the whole pie, and statistics are just a piece of it!

- Ignoring Sample Size: Not considering the size of your sample can lead to misleading conclusions. When researchers take a small sample, the statistic can vary considerably from one sample to the next. This sampling error can produce a statistic that doesn’t accurately reflect the parameter. So make sure your sample is large enough and representative; don’t just grab the first 10 people you see at the coffee shop!

- Overgeneralizing Findings: Another common mistake is overgeneralizing findings from a statistic to a whole population. Just because 70% of your sample prefers chocolate ice cream doesn’t mean all people love it! Always approach sample results with caution, as they cannot fully represent the entire population.

- Misinterpreting Statistical Notation: Statistical notation can feel like a foreign language, and a common error is misreading symbols. For instance, writing μ (the population mean) when you mean x̄ (the sample mean) can lead to significant misunderstandings. Familiarize yourself with the notation, or you might end up reporting the wrong numbers. Yikes!

- Neglecting Context: Statistics don’t exist in a vacuum. Ignoring the context behind the data can lead to erroneous interpretations. For example, saying “50% of respondents favor a policy” lacks clarity without knowing who the respondents were. Always provide context to your statistics for a clearer picture!

- Assuming Parameters are Always Known: Researchers often fall into the trap of thinking they can always know the parameter. In reality, parameters are usually unknown and can only be estimated using statistics. Don’t assume you know the whole story just because you gathered some data.

- Failing to Acknowledge Variability: Statistics can vary widely depending on the sample taken, but some researchers overlook this variability. Failing to account for it can lead to overconfidence in results. Always include measures of variability, like confidence intervals, to represent the uncertainty inherent in your estimates.
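The sample-size and variability pitfalls can be checked with a quick simulation. This is a minimal sketch with a hypothetical, randomly generated population: for each sample size, it draws many samples and measures how much the sample means spread out. The spread roughly matches σ/√n, which is why larger samples give steadier statistics.

```python
import random
from statistics import fmean, pstdev

random.seed(0)  # reproducible illustration

# Hypothetical population of 20,000 values (made-up data)
population = [random.gauss(50, 12) for _ in range(20_000)]
sigma = pstdev(population)  # population standard deviation, a parameter

def spread_of_sample_means(n, reps=2_000):
    """Standard deviation of x-bar across `reps` simple random samples of size n."""
    means = [fmean(random.sample(population, n)) for _ in range(reps)]
    return pstdev(means)

for n in (10, 100):
    print(f"n = {n:>3}: spread of x-bar {spread_of_sample_means(n):.2f}, "
          f"sigma / sqrt(n) {sigma / n ** 0.5:.2f}")
```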

By steering clear of these common mistakes, you’ll be better equipped to understand the difference between parameters and statistics. This clarity will enhance your research, ensuring that your conclusions are both accurate and reliable. Remember, in the world of statistics, a little caution goes a long way!

Conclusion

Understanding the difference between parameters and statistics is essential for anyone engaged in research and data analysis. Parameters, the numerical values that describe an entire population, offer fixed insights that are often difficult to obtain directly. In contrast, statistics, which are derived from samples, provide estimates that can vary based on the data collected. This fundamental distinction is not just academic; it has real-world implications.

To summarize, parameters represent the whole, while statistics offer a glimpse into parts of that whole. Researchers frequently aim to infer parameters from statistics, making it crucial to appreciate both concepts. Misunderstanding these terms can lead to significant errors in interpretation, which can skew results or mislead decision-making processes.

Moreover, the implications of confusing parameters with statistics can ripple through a study. For example, if a researcher mistakenly treats a statistic as a parameter, they might draw conclusions that misrepresent the true characteristics of a population. This can lead to misguided policies or actions based on flawed data interpretations.

In practical applications, recognizing the importance of sampling is key. Reliable statistics can provide accurate estimations of parameters, especially when samples are appropriately randomized and representative. Understanding sampling error and variability allows researchers to communicate their findings effectively and with the necessary caution.

In conclusion, grasping the difference between parameters and statistics is not just an academic exercise; it is a vital skill for effective research. It empowers researchers to make informed decisions, enhances the credibility of their work, and ensures that data-driven insights are both valid and actionable. So, whether you’re analyzing survey results, testing hypotheses, or making data-driven decisions, keep these distinctions in mind for more robust research outcomes.

FAQs

What is an example of a parameter?

A classic example of a parameter is the mean income of all households in a given country. This value summarizes the entire population’s income characteristics.

How do statistics help in estimating parameters?

Statistics derived from sample data allow researchers to make educated guesses about population parameters. By analyzing sample means, proportions, and variances, statisticians can estimate what the corresponding population parameters might be.

Are statistics always less reliable than parameters?

Not necessarily! While parameters provide exact values, statistics can still be reliable estimates if drawn from a well-designed sample. The reliability often hinges on the sample size and representativeness.

Can a statistic ever be a parameter?

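Yes! If a value is calculated from every member of a population, it is a parameter rather than an estimate of one. For instance, if a survey collects data from every individual in a group (a census), the resulting mean is the group’s true mean: the calculation you would normally use to get a statistic gives you the parameter itself.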

Why is sampling important in research?

Sampling is crucial because it’s often impractical to collect data from an entire population. Well-designed samples enable researchers to make valid inferences about population parameters, saving time and resources while still providing valuable insights.


Thank you for reading this far 🙂

All images from Pexels